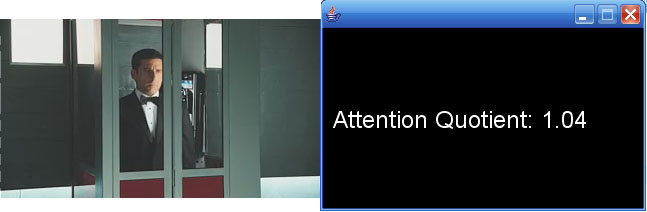

This project is for determining the popularity of different video segments shown on public displays. It uses a camera and computer vision to count how many faces are watching the screen. It takes advantage of a shortcoming of the OpenCV face-detection libraries for Processing: they only detect faces within a very limited range of angles, so if it finds a face, you can be assured that the person is facing the screen.

Shawn Van Every created a video drop box where people can leave videos to be shown on various screens around the floor. We wanted to be able to cull a best-of list from the drop box, so Shawn and I thought face tracking might be interesting. The sketch is currently hardwired to a particular video and only shows the “Attention Quotient” on the screen. If I had more time, I would integrate it with a database of videos and record each video's attention score in that database. This was done as the Tuesday project in the 7 Projects in 7 Days (5 in 5 for me) festival at ITP.
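The Attention Quotient is the running average of faces detected per camera frame: total faces seen divided by frames examined. The plain-Java sketch below shows that bookkeeping and extends it toward the database idea by keeping one tally per video and ranking videos for a best-of list. It is only an illustration: the class and method names (`AttentionLedger`, `recordFrame`, `bestOf`) and the video IDs are hypothetical, and an in-memory `HashMap` stands in for a real database.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory stand-in for a video database:
// keeps a running face count per video and ranks videos by Attention Quotient.
public class AttentionLedger {

    // Per-video tallies: faces seen and frames examined
    private static final class Tally {
        long faces;
        long frames;
    }

    private final Map<String, Tally> tallies = new HashMap<>();

    // Record one camera frame taken while videoId was playing
    public void recordFrame(String videoId, int facesInFrame) {
        Tally t = tallies.computeIfAbsent(videoId, k -> new Tally());
        t.faces += facesInFrame;
        t.frames++;
    }

    // Attention Quotient = faces seen / frames examined (0 if never sampled)
    public double attentionQuotient(String videoId) {
        Tally t = tallies.get(videoId);
        if (t == null || t.frames == 0) {
            return 0.0;
        }
        return (double) t.faces / t.frames;
    }

    // The n videos with the highest Attention Quotient, best first
    public List<String> bestOf(int n) {
        List<String> ids = new ArrayList<>(tallies.keySet());
        ids.sort((a, b) -> Double.compare(attentionQuotient(b), attentionQuotient(a)));
        return ids.subList(0, Math.min(n, ids.size()));
    }

    public static void main(String[] args) {
        AttentionLedger ledger = new AttentionLedger();
        // Hypothetical samples: faces counted in successive frames
        int[] trailerFrames = {0, 1, 2, 1};
        int[] lectureFrames = {0, 0, 1, 0};
        for (int f : trailerFrames) ledger.recordFrame("trailer", f);
        for (int f : lectureFrames) ledger.recordFrame("lecture", f);
        System.out.println(ledger.attentionQuotient("trailer")); // 1.0
        System.out.println(ledger.attentionQuotient("lecture")); // 0.25
        System.out.println(ledger.bestOf(1));                    // [trailer]
    }
}
```

Because the quotient is a per-frame average, videos that were sampled for different lengths of time can still be compared directly.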

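Tech: I used an Axis IP camera for video capture, the PFaceDetect library (OpenCV-based face detection for Processing) to find faces, and QuickTime for Java for playback. Below is the code: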

```java
import java.awt.Color;
import java.awt.Component;
import java.util.Locale;

import javax.swing.JFrame;

import pFaceDetect.PFaceDetect;
import processing.core.PApplet;
import processing.core.PFont;
import quicktime.QTException;
import quicktime.QTSession;
import quicktime.app.view.MoviePlayer;
import quicktime.app.view.QTFactory;
import quicktime.io.OpenMovieFile;
import quicktime.io.QTFile;
import quicktime.std.StdQTException;
import quicktime.std.movies.Movie;
import vxp.CaptureAxisCamera;

public class VoteWithYourFace extends PApplet {

    // QuickTime objects for playing the video being rated
    Movie qtMovie;
    MoviePlayer qtPlayer;
    Component qtCanvas;
    JFrame videoFrame;

    // Hardwired video file being rated
    String mediaFileName = "C:\\ALLFOLDERS\\ps3\\video\\others\\get_smart-tlr1_h.320.mov";

    private CaptureAxisCamera video; // frames from the Axis IP camera facing the audience
    private PFaceDetect face;        // OpenCV-based face detector
    PFont myFont;

    int attention;     // running total of faces detected across all frames
    int opportunities; // number of frames examined so far

    public static void main(String[] args) {
        PApplet.main(new String[] { "VoteWithYourFace" });
    }

    public void setup() {
        // QuickTime for Java must be initialized before any movie calls
        try {
            QTSession.open();
            System.out.println("QTSession Open");
        } catch (QTException qte) {
            System.out.println("Sorry NOT: QTSession Open");
        }

        // Load the movie from disk
        try {
            OpenMovieFile omf = OpenMovieFile.asRead(new QTFile(mediaFileName));
            qtMovie = Movie.fromFile(omf);
        } catch (Exception e) {
            System.out.println("Problem opening file");
        }

        // Wrap the movie in an AWT component so it can sit in its own window
        try {
            qtPlayer = new MoviePlayer(qtMovie);
            qtCanvas = (QTFactory.makeQTComponent(qtMovie)).asComponent();
        } catch (Exception e) {
            System.out.println("Trouble Making Movie");
        }

        setBackground(Color.BLACK);

        // Size the sketch to the movie and start playback from the beginning
        try {
            setSize(qtMovie.getNaturalBoundsRect().getWidth(),
                    qtMovie.getNaturalBoundsRect().getHeight());
            qtMovie.setTimeValue(0);
            qtMovie.start();
        } catch (StdQTException e) {
            e.printStackTrace();
        }

        // Show the movie in a borderless window
        videoFrame = new JFrame();
        videoFrame.add(qtCanvas);
        videoFrame.setUndecorated(true);
        videoFrame.setSize(getWidth(), getHeight());
        videoFrame.setVisible(true);
        videoFrame.setLocation(100, 100);

        // Camera plus a frontal-face Haar cascade, which only matches faces looking at the screen
        video = new CaptureAxisCamera(this, "128.122.151.189", width, height, false);
        face = new PFaceDetect(this, width, height, "haarcascade_frontalface_default.xml");
        myFont = createFont("Arial", 24);
    }

    public void draw() {
        background(0);

        // Grab a camera frame and count the frontal faces in it
        video.read();
        face.findFaces(video);
        opportunities++;
        int[][] res = face.getFaces();
        attention = attention + res.length;

        // Attention Quotient = average number of faces per frame so far.
        // String.format avoids the scientific notation (e.g. "5.0E-5")
        // that String.valueOf produces for very small floats.
        String AQ = String.format(Locale.US, "%.2f", (float) attention / opportunities);

        textFont(myFont);
        text("Attention Quotient: " + AQ, 10, 100);

        // Debugging: uncomment to show the camera image and detected faces
        //image(video, 0, 0);
        //drawFace();
    }

    // Draw a rectangle around each detected face (x, y, width, height)
    void drawFace() {
        int[][] res = face.getFaces();
        for (int i = 0; i < res.length; i++) {
            int x = res[i][0];
            int y = res[i][1];
            int w = res[i][2];
            int h = res[i][3];
            rect(x, y, w, h);
        }
    }
}
```
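Building the on-screen Attention Quotient with `String.valueOf(...)` and keeping only the first four characters has a pitfall: Java's `Float.toString` switches to scientific notation for magnitudes below 10^-3, so a very low quotient such as 1 face in 20,000 frames becomes `"5.0E"` instead of `"0.00"`. The standalone sketch below compares that approach with `String.format`; the class and helper names (`AqFormat`, `truncated`, `formatted`) are my own, purely for illustration.

```java
import java.util.Locale;

// Compares two ways of turning the Attention Quotient into display text.
public class AqFormat {

    // Approach 1: String.valueOf, truncated to at most 4 characters
    static String truncated(int attention, int opportunities) {
        String aq = String.valueOf((float) attention / opportunities);
        return aq.substring(0, Math.min(aq.length(), 4));
    }

    // Approach 2: fixed two-decimal formatting, never scientific notation
    static String formatted(int attention, int opportunities) {
        return String.format(Locale.US, "%.2f", (float) attention / opportunities);
    }

    public static void main(String[] args) {
        System.out.println(truncated(1, 3));     // 0.33
        System.out.println(formatted(1, 3));     // 0.33
        System.out.println(truncated(1, 20000)); // 5.0E
        System.out.println(formatted(1, 20000)); // 0.00
    }
}
```

Note that the two also differ in rounding: truncation turns 2/3 into `"0.66"`, while `%.2f` rounds it to `"0.67"`. Passing `Locale.US` keeps the decimal point consistent regardless of the machine's default locale.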