Navigating Tongues

Angie Kim

Advisor: Sharon De La Cruz

How can we navigate our languages to communicate and interact with each other more inclusively with different feelings, senses and experiences, for a community of ‘beings’?

Abstract

Uncovering the layers gained and lost in translation, Angie creates a series of memories that embrace their travels through different mediums of communication. Her project is a series of practices exploring how to translate between forms of communication and senses through other language modalities, so that we can communicate our feelings. These feelings can be private, personal or public, friendly or political, time-based or instant, but each practice keeps the nature of writing and translating a letter.

What started as a letter about a cherished memory transforms into a description of an image, then a prompt for AI, then a generated image not so far from the original. Through all these layers of translation, Angie explores the similarities between AI image generation and digging deep into your history for a memory. Disrupting the presumption that alternative text is secondary, Angie grounds her images in their descriptions, treating each as a written title just as valuable to the work as the image itself.

Technical Details

The experiments include both digital and physical experiences, and the languages I focused on most are visual, auditory, tactile and verbal.

Five main ‘translations’ are explored through my practices: imagination to visuals, visual to tactile, speech to text, movement to text, and text to image.

For imagination to visual, I used open-source p5.js sketches to enable users to make 2D graphics. They include a lo-fi version of Adobe Illustrator’s brush and pen tools and path modification function, along with some visual templates that I built from Bézier curves and simple graphics.
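As a rough illustration of what a pen-tool path built from Bézier curves involves, the sketch below samples one cubic segment into a list of points that could then be drawn or modified. The function names `cubicBezierPoint` and `sampleSegment` and the example coordinates are assumptions made for illustration; they are not taken from the original sketches.

```javascript
// Hypothetical helpers; the original sketches are not shown in this text.

// Evaluate a cubic Bezier curve at parameter t in [0, 1].
// p0 and p3 are anchor points, p1 and p2 are control handles.
function cubicBezierPoint(p0, p1, p2, p3, t) {
  const u = 1 - t;
  const a = u * u * u;
  const b = 3 * u * u * t;
  const c = 3 * u * t * t;
  const d = t * t * t;
  return {
    x: a * p0.x + b * p1.x + c * p2.x + d * p3.x,
    y: a * p0.y + b * p1.y + c * p2.y + d * p3.y,
  };
}

// Sample one segment into a polyline using `steps` equal parameter intervals.
function sampleSegment(segment, steps) {
  const points = [];
  for (let i = 0; i <= steps; i++) {
    points.push(
      cubicBezierPoint(segment.p0, segment.p1, segment.p2, segment.p3, i / steps)
    );
  }
  return points;
}

// Example segment with made-up coordinates: an arch from (0, 0) to (100, 0).
const segment = {
  p0: { x: 0, y: 0 },
  p1: { x: 0, y: 100 },
  p2: { x: 100, y: 100 },
  p3: { x: 100, y: 0 },
};
const outline = sampleSegment(segment, 4);
```

Inside a p5.js sketch, the same segment can be drawn directly with the built-in `bezier()` function; sampling it by hand is mainly useful when the path needs to be edited point by point.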

With those p5 sketches, I conducted a small workshop and user testing to translate digital drawings into tactile graphics. I used swell form printing to experiment with different outline styles and to check their legibility. The raised lines turned out to be less accurate at delivering information, but participants said they loved being able to ‘touch’ the visuals and that the added sense of touch was welcome.

The next translation I tried was spoken language into text and graphics. I again used p5.js because it is open source and public. With the p5.speech library, users can speak their sentences to write a letter and interact visually with the words through their movements.
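A minimal sketch of how speech might be turned into words on a canvas with p5.speech is shown below. It assumes a browser page that loads both p5.js and p5.speech; the helper `layoutWords`, the spacing values, and the pointer-based motion are illustrative assumptions, not the original sketch.

```javascript
// Hypothetical helper: split a recognized sentence into word objects
// that a sketch can position and animate individually.
function layoutWords(sentence, startX, y, spacing) {
  return sentence
    .trim()
    .split(/\s+/)
    .filter((w) => w.length > 0)
    .map((word, i) => ({ word, x: startX + i * spacing, y }));
}

let words = [];

// p5.js global-mode entry point. It only runs in a browser where
// p5.js and p5.speech are loaded; nothing here is called otherwise.
function setup() {
  createCanvas(600, 200);
  const rec = new p5.SpeechRec('en-US', () => {
    // resultValue is true when a transcript is available in resultString.
    if (rec.resultValue) {
      words = layoutWords(rec.resultString, 20, 100, 80);
    }
  });
  rec.continuous = true;      // keep listening after each phrase
  rec.interimResults = false; // only report finished phrases
  rec.start();
}

function draw() {
  background(255);
  for (const w of words) {
    // Assumed interaction: words drift vertically with the pointer.
    text(w.word, w.x, w.y + (mouseY - height / 2) * 0.1);
  }
}
```

Keeping the word layout in a plain function separates the transcript handling from the drawing, so the visual response to movement can be changed without touching the recognition code.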

Amplifying the movement aspect of the translation, I moved on to the next practice: collaborating with my sister, who is a dancer, to explore body language as poetic communication and to decode its visuals with audio description.

I asked her to write me a handwritten letter and, instead of showing it to me, to dance to describe its contents.

After I got the video, I tried to see what was happening and describe it verbally.

After translating her movement into text, I made a haptic object that vibrates with the verbal narration of the translation.

The last and most integral part of my experiment is translating memory into senses. It started with translating text into my own memories and then into visuals. This project, ‘synthetic nostalgia’, is an archive of AI-generated photos with prompts and memories drawn from actual letters that I received from my friends.

Some of the letters I received contained passages that brought back memories.

I tried to recall those memories and found that, in the process of recalling, I always add or modify some details. I sometimes looked up photos we had taken for reference, which also affected how I visualized the memories.

From that, I found that memory translation is quite similar to AI image generation. Receiving a letter is similar to receiving a text-based prompt, and our brain works like an AI engine that translates, recalls and generates output based on our own database or the mass one.

I also found that the text prompts used in AI image generation are quite similar to alt text on the web. Alt text, or alternative text, is a description of an image on a web page. With screen readers, it helps visually impaired people understand what the image shows; it also helps search engine bots understand image contents, and it appears on the page when the image fails to load.

A prompt, likewise, is a descriptive blurb of an image. I thought that the prompt in AI image generation could serve as the alt text of the generated image, and I started to create some ‘plastic’ memories with the text in the letters.

The created images are archived and shown in two different forms: an installation and books.

The first was an installation with photos and a photobook, as part of a show at NYC Resistor.

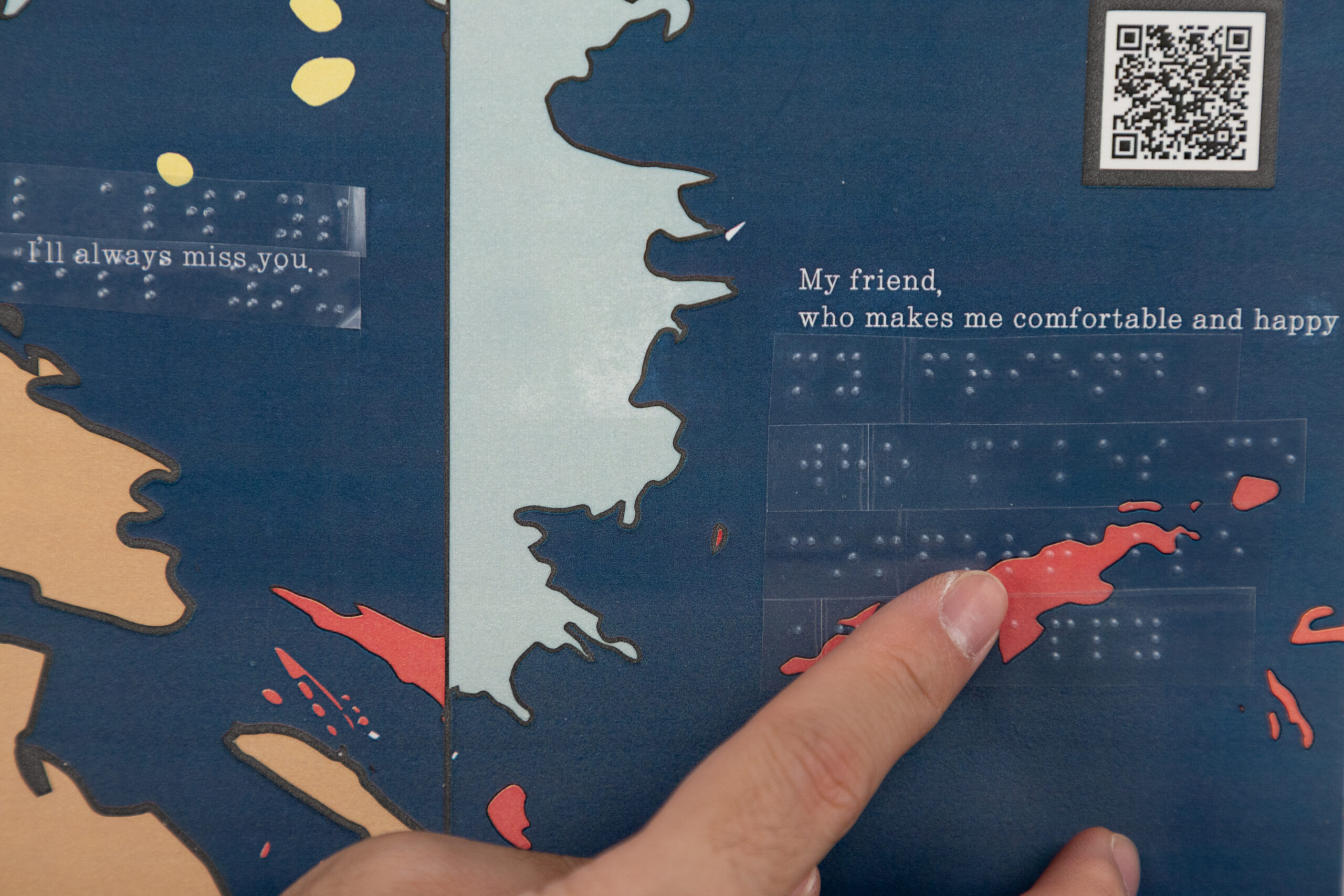

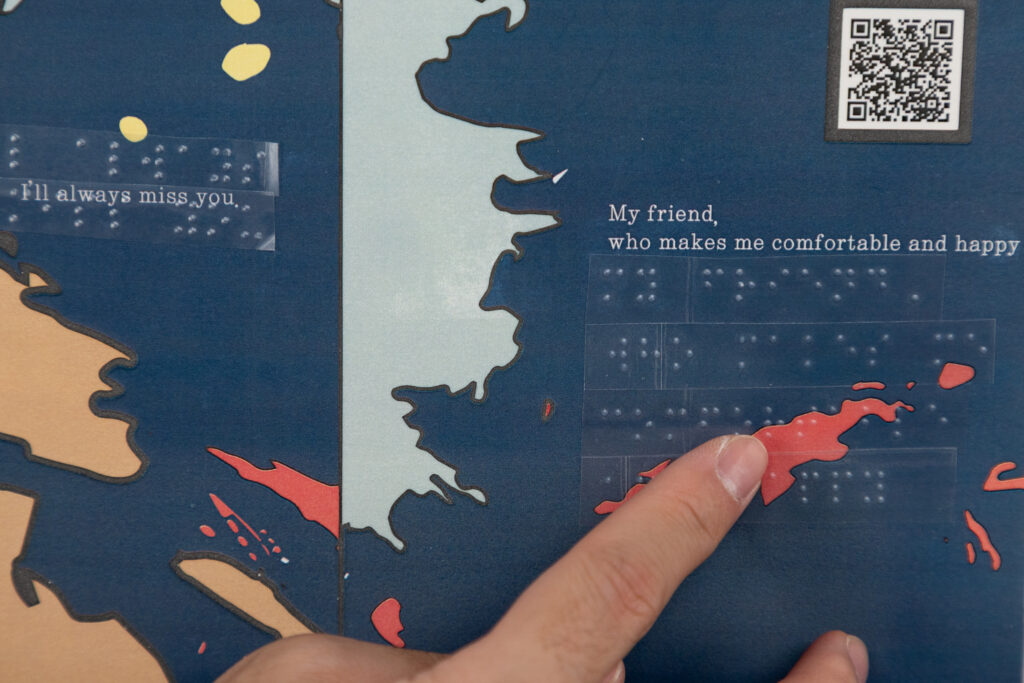

The second juxtaposed the prompt, alt text and images at the same time. It was shared through a tactile graphic book with QR codes linked to a website. The prompts used to generate these images also function as alt text.
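One way the prompt could double as alt text on such a website is sketched below: the same string is written into the image's `alt` attribute and shown as a visible caption. The function names, the file name, and the sample prompt are placeholders for illustration, not the markup or data of the actual site.

```javascript
// Escape characters that are unsafe inside HTML text or attribute values.
function escapeHtml(text) {
  return text
    .replace(/&/g, '&amp;')
    .replace(/"/g, '&quot;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;');
}

// Build markup for one archive entry (hypothetical structure):
// the prompt is reused as the alt attribute and as the visible caption.
function memoryFigure(entry) {
  const prompt = escapeHtml(entry.prompt);
  return [
    '<figure>',
    `  <img src="${escapeHtml(entry.src)}" alt="${prompt}">`,
    `  <figcaption>${prompt}</figcaption>`,
    '</figure>',
  ].join('\n');
}

// Placeholder entry; the real prompts come from the letters.
const entry = {
  src: 'memory-01.png',
  prompt: 'Placeholder prompt: two friends on a "windy" pier',
};
const html = memoryFigure(entry);
```

Because screen readers announce the `alt` value, a visitor who cannot see the generated image still receives the same words that produced it.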

By making a tactile book, a website and QR codes, I was able to deliver the ‘memories’ with different sensory options. You might touch and scan the book. You might just see the visual and verbal languages, while people who use screen readers might ‘hear’ them.

With QR codes, I was able to present memories not just visually or textually, but in a format that encompasses sight, sound, and touch, embracing the wider spectrum of human senses.