Project Jupiter: A VR Mech Pilot Simulator

Weichen Shen

Advisor: Tiri Kananuruk

Project Jupiter: A VR Mech Pilot Simulator is a single-player alt-control game that combines virtual reality with physical controls. Players pilot a mech from a virtual cockpit, moving, shooting, and engaging in close combat to complete quests in space.

Project Description

Project Jupiter: A VR Mech Pilot Simulator delivers an immersive experience by combining virtual reality with physical controls. Players step into the virtual cockpit of a massive mech, operating real-world levers, buttons, and throttles that are precisely mapped to their virtual counterparts. This seamless integration creates a deep sense of immersion, making players feel like they are truly piloting the war machine.

The project features dynamic mech movements, from deep-space navigation to intense close-combat and long-range firefights with enemies equipped with beam rifles and sabers. The mech’s movement is carefully designed to amplify the scale and power of each battle scenario.

Rather than embodying the mech through the player's body, the simulator introduces a detailed operating system that offers a believable answer to how pilots perform the precise, cinematic stunts seen in motion graphics and anime. By mastering physical controls, displays, and timing, players engage with a more authentic, skill-based piloting experience.

By blending physical analog interactivity with advanced VR systems and a compelling narrative, Project Jupiter: A VR Mech Pilot Simulator offers a unique and thrilling experience—perfect for mech fans, VR enthusiasts, and anyone ready to command a true mech's cockpit.

Technical Details

Unreal Engine:

Interaction Design (Raw Input and Enhanced Input systems): Combines Windows Raw Input from the joystick with custom mapping of the Quest controllers to create realistic, intuitive controls for operating the mech.

Flying AI Enemy:

Behavior Trees designed for different types of enemies with 6 states:

Passive (Patrol), Attack, Investigate, Seeking, Frozen and Dead.
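
As a rough illustration, the six states above could be modeled as a small transition function. This is only a sketch, not the project's Behavior Tree: the inputs (`sees_player`, `heard_noise`, `stunned`, `search_expired`) and the transition rules are assumptions made for the example, since the project page does not describe what triggers each state.

```python
from enum import Enum, auto


class EnemyState(Enum):
    PASSIVE = auto()      # patrolling
    ATTACK = auto()
    INVESTIGATE = auto()
    SEEKING = auto()
    FROZEN = auto()
    DEAD = auto()


def next_state(state, *, health, sees_player,
               heard_noise=False, stunned=False, search_expired=False):
    """Pick the next state from hypothetical sensor inputs (all assumed)."""
    if state is EnemyState.DEAD or health <= 0:
        return EnemyState.DEAD            # death is terminal
    if stunned:
        return EnemyState.FROZEN
    if sees_player:
        return EnemyState.ATTACK
    if state is EnemyState.ATTACK:
        return EnemyState.SEEKING         # just lost sight: go looking
    if state in (EnemyState.SEEKING, EnemyState.INVESTIGATE) and not search_expired:
        return state                      # keep searching until the timer runs out
    if heard_noise:
        return EnemyState.INVESTIGATE
    return EnemyState.PASSIVE
```

In Unreal Engine, a state like this would more likely live in a Blackboard key that Behavior Tree decorators read, rather than in a standalone function.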

Smart, custom behavior that responds to the player’s actions and location: finding cover out of the player’s sight when health is low; checking corners where the player might hide after losing sight of them; a chance to block the player’s attacks; etc.

Unlike traditional AI, which relies on a navmesh that confines movement to a surface, the project uses a Flying Pathfinding System to navigate the player and AI enemies through open 3D space.
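
To show what pathfinding without a navmesh can look like, here is a minimal sketch that searches a 3D voxel grid, so agents can route over, under, or around obstacles. It uses a plain breadth-first search as a stand-in; the project page does not say which algorithm or data structure the Flying Pathfinding System uses, and the grid size, `blocked` set, and function name are all hypothetical.

```python
from collections import deque

# The six axis-aligned moves available in free 3D space.
STEPS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def find_path_3d(start, goal, blocked, size):
    """Breadth-first search over a size^3 voxel grid.

    Returns the list of cells from start to goal, or None if unreachable.
    """
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:       # walk back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        for dx, dy, dz in STEPS:
            nxt = (cell[0] + dx, cell[1] + dy, cell[2] + dz)
            inside = all(0 <= c < size for c in nxt)
            if inside and nxt not in blocked and nxt not in came_from:
                came_from[nxt] = cell
                queue.append(nxt)
    return None


# An asteroid sits directly between the two points, so the path detours around it.
path = find_path_3d((0, 0, 0), (2, 0, 0), blocked={(1, 0, 0)}, size=3)
```

A production system would typically swap in A* with a distance heuristic and a sparser structure such as an octree, since dense grids grow quickly in three dimensions.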

Mech Animation:

Various animations for enemies (each enemy has its own unique set) covering every situation (idle, move, attack, hit response, equip weapon, etc.).

Hand-keyframed, realistic animations for the mech. Unlike a human body, which can deform and bend freely, a mech’s body parts can only rotate as far as its rigid mechanical parts allow. The mech’s IK is retargeted from a human IK rig, and keyframes that make the movement look unnatural are adjusted by hand.

Tutorial Design

A linear tutorial covering all the controls: turning, thrust, horizontal movement, vertical movement, crosshair control, firing the laser beam, and using the shield.

Animated Hologram of the actual Quest Controller that shows the player how to interact.

Highlighted buttons on the Quest controller hologram that show the player where to interact.

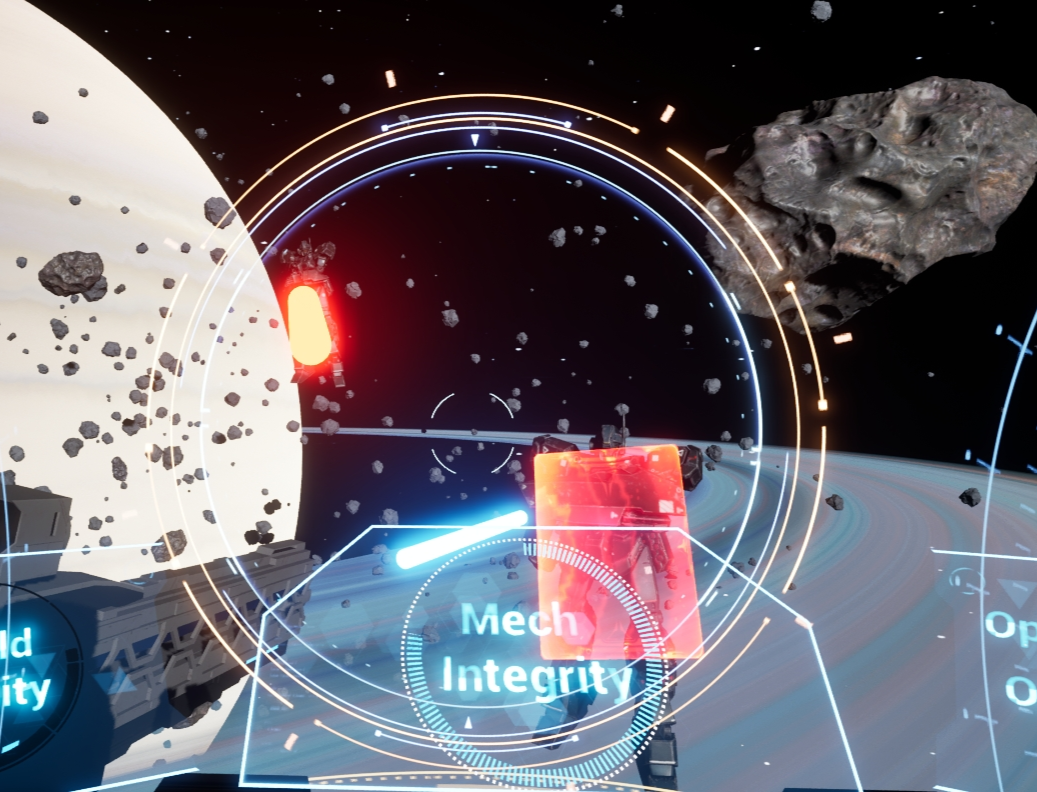

UI Design

Futuristic UI that reveals the advanced technology used for the mech

Dynamic crosshair with visual cues for when it is aimed at a target and when a shot hits the target successfully

Dynamic enemy-detection marker that scans all enemies within a radius and changes size according to each enemy’s distance and position relative to the crosshair
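
The distance-based part of the detection marker can be sketched as a simple linear interpolation. This is an illustration only: the scan radius, the pixel sizes, and the choice that nearer enemies get larger markers are assumptions, and the crosshair-relative adjustment mentioned above is left out.

```python
def marker_size(distance, scan_radius=1000.0, min_size=16.0, max_size=64.0):
    """Return the marker size for an enemy, or None if it is outside the scan radius.

    All default values are hypothetical; nearer enemies are assumed to get larger markers.
    """
    if distance > scan_radius:
        return None                       # not detected, so no marker is drawn
    t = max(distance, 0.0) / scan_radius  # 0.0 at the player, 1.0 at the edge
    return max_size + (min_size - max_size) * t
```

With these defaults, an enemy halfway to the edge of the scan radius gets a marker halfway between the two sizes.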

Scene Construction:

Dynamic Asteroids fields

Background spaceships firing beams at each other to heighten the intensity of the scenes

AutoAim and Firing system:

In first-person and third-person shooters, the player fires wherever the camera is facing, and the crosshair is always fixed at the center of the camera view.

In the cockpit, by contrast, the direction the player looks does not affect the position of the crosshair on the screen.

Like other shooters played with gamepads, the project includes an auto-aim system to improve the user experience.
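
One common way to build auto-aim is to pick the enemy whose direction lies closest to the crosshair's aim direction, within a small cone. The sketch below illustrates that idea; it is not taken from the project, and the function name, the 10-degree cone, and the tuple-based vectors are assumptions.

```python
import math


def pick_auto_aim_target(origin, aim_dir, targets, max_angle_deg=10.0):
    """Return the target closest in angle to aim_dir inside the cone, else None."""
    aim_len = math.sqrt(sum(c * c for c in aim_dir))
    best, best_angle = None, max_angle_deg
    for target in targets:
        to_target = [t - o for t, o in zip(target, origin)]
        dist = math.sqrt(sum(c * c for c in to_target))
        if dist == 0 or aim_len == 0:
            continue                      # direction is undefined, skip
        cos_a = sum(a * b for a, b in zip(aim_dir, to_target)) / (aim_len * dist)
        # Clamp to guard against floating-point drift outside [-1, 1].
        angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))
        if angle <= best_angle:
            best, best_angle = target, angle
    return best


enemies = [(100.0, 5.0, 0.0), (100.0, 50.0, 0.0), (-100.0, 0.0, 0.0)]
locked = pick_auto_aim_target((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), enemies)
```

Because the crosshair is decoupled from the headset, `aim_dir` here would come from the crosshair position rather than from the camera's forward vector.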

Maya:

Modeled and textured the entire cockpit to make it believable

Fabrication:

3D Printing: A device that holds the Quest controller (compatible with Oculus Touch and Oculus Touch Plus) and connects to the joystick.

Laser Cut: A layered device that mounts the joystick on the arms of a chair to recreate a pilot seat in real life.

Research/Context

In mech-themed anime and motion graphics, pilots often perform dramatic, high-speed maneuvers with seemingly effortless control. Yet one question remains unanswered: how do they actually operate the mech? Most of these works avoid the question by introducing "neuro-piloting," in which the mech moves as an extension of the pilot’s body, controlled by the pilot’s mind. This sidesteps any explanation of the mechanics behind the machine’s movements.

Similarly, in mech-based video games, the pilot’s perspective is rarely shown. The focus is on the mech itself, with only brief glimpses of the cockpit—if at all. Players are left without a realistic, tangible system that explains how pilots translate their commands into the mech’s actions.

To fill this gap, I aim to design an interactive control system that provides a believable, skill-based method for piloting a mech—one that moves beyond abstract neuro-controls and instead offers a hands-on, mechanical operation true to the scale and complexity of a mech.