As the circle of light grows, so does the circumference of darkness.

All posts by Daniel B O'Sullivan

Page Ghosts

If you have a webcam, you can go to http://itp.nyu.edu/dano/face to hover over a web page with other people looking at that same page. Your video image follows your cursor around the page, and other people's video images come into sharper focus when they are close to you on the page. This needs the Flash 10 plugin.
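The paragraph above describes other people's video images coming into sharper focus as they get closer to you on the page. Here is a minimal Python sketch of one way to turn the distance between two cursors into a blur radius for the other person's video. The linear falloff, the function name `blur_radius` and its default constants are assumptions for illustration. This is not the original Flash implementation.

```python
import math

def blur_radius(my_cursor, other_cursor, focus_distance=100.0, falloff=400.0, max_blur=12.0):
    """Return a blur radius in pixels for another visitor's video tile.

    Hypothetical sketch: tiles within focus_distance pixels of my cursor are sharp
    (radius 0.0). Beyond that, blur grows linearly and reaches max_blur once the
    tile is focus_distance + falloff pixels away.
    """
    distance = math.dist(my_cursor, other_cursor)
    if distance <= focus_distance:
        return 0.0
    # Fraction of the way through the falloff zone, capped at 1.0.
    t = min(1.0, (distance - focus_distance) / falloff)
    return t * max_blur
```

A tile inside the focus zone is drawn sharp. Past that zone the blur ramps up until it hits the cap, so distant visitors fade into a soft crowd rather than disappearing.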

Breath Bra

Breathing is surprisingly underrated. I made this example for students in my Rest of You class so they could see their breathing on their cellphones and log it over the course of a day. In the process, I lived the dream of wearing a bra on the outside of my clothes during class.

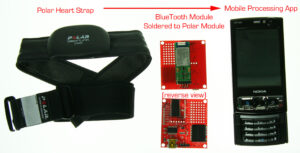

Phone Heart

It is kind of a kick to see your heart beat. Polar is a company that makes lots of gear that lets athletes watch their heart beat. The data usually goes to something like a watch, and I think it is pretty hard to get your own hands on it. I made this example for students in my Rest of You class so they could use their heartbeat to control something, or just see the same data in their own visualization.

Don’t Blink (In Progress)

You look at a screen that works as a mirror reflecting your face. If you blink, a picture of me thumbing my nose at you comes up, but only while your eyes are shut, so you will never know. Just like you will never know if your refrigerator light is really off. This was done as the Thursday project in the 7 projects in 7 days (5 in 5 for me, … er make that 3.5 in 5) festival at ITP. I hope to get back to it this afternoon to finish it, but I don’t know if I will.

I am looking for holes in the skin color to find the eyes. When I get back today, I will segment out just the holes for the eyes.
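Here is a minimal sketch of the “holes in the skin color” idea. It assumes a skin/non-skin mask has already been computed for the frame by some color threshold that is not shown. Non-skin regions that touch the image border are treated as background. Regions completely enclosed by skin are candidate eyes, nostrils or mouth. The function `find_holes` is hypothetical and is not the project's code. Coordinates are (row, column).

```python
from collections import deque

def find_holes(skin_mask):
    """Return connected regions of non-skin pixels that are fully enclosed by skin.

    skin_mask is a list of rows of booleans (True = skin-colored pixel).
    Non-skin pixels reachable from the image border are background, not holes.
    """
    height, width = len(skin_mask), len(skin_mask[0])
    seen = [[False] * width for _ in range(height)]

    def flood(start_y, start_x):
        # Breadth-first search over 4-connected non-skin pixels.
        region, touches_border = [], False
        queue = deque([(start_y, start_x)])
        seen[start_y][start_x] = True
        while queue:
            y, x = queue.popleft()
            region.append((y, x))
            if y in (0, height - 1) or x in (0, width - 1):
                touches_border = True
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if 0 <= ny < height and 0 <= nx < width and not seen[ny][nx] and not skin_mask[ny][nx]:
                    seen[ny][nx] = True
                    queue.append((ny, nx))
        return region, touches_border

    holes = []
    for y in range(height):
        for x in range(width):
            if not skin_mask[y][x] and not seen[y][x]:
                region, touches_border = flood(y, x)
                if not touches_border:
                    holes.append(region)
    return holes
```

To pick out just the eyes, one could then keep the two holes in the upper part of the face that are similar in size.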

Here is the code so far:

Face the Web

This allows people to congregate in a layer on top of the web browser. Using a webcam attached to your computer, the application grabs your face and sends it to other people looking at the same web page. Your face is displayed in other people’s browsers at the place on the page you are most interested in, as expressed by your cursor location. The web page becomes a substrate for gathering. In the future I would like to degrade the resolution of the faces of people interested in things farther away on the page, and to change to a peer-to-peer architecture for the actual transmission of the images. This was done as the Thursday project in the 7 projects in 7 days (5 in 5 for me, … er make that 4 in 5) festival at ITP.

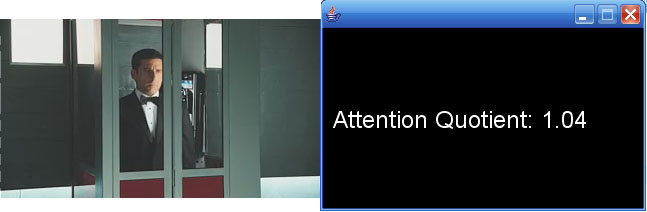

Attention

This is for determining the popularity of different video segments on public displays. It uses a camera and computer vision to count how many faces are watching the screen. It takes advantage of a shortcoming of the OpenCV face detection available in Processing, which only detects faces at a very limited range of angles. If it finds a face, you can be fairly sure that the person is facing the screen. Shawn Van Every created a video drop box for people to leave video to be seen on various screens around the floor. We wanted to be able to cull a best-of list from the drop box, so Shawn and I thought face tracking might be interesting. It is currently hardwired to a particular video and only shows the “Attention Quotient” on the screen. If I had more time, I would integrate it with a database of videos and record the attention in that database. This was done as the Tuesday project in the 7 projects in 7 days (5 in 5 for me) festival at ITP.
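The “Attention Quotient” is not defined precisely above. One plausible reading, offered here as an assumption, is the average number of frontal faces the detector reports per sampled frame while a video plays. That number could then rank videos for a best-of list. Both functions below are hypothetical, and the face detection itself is assumed to happen elsewhere.

```python
def attention_quotient(face_counts):
    """Average number of frontal faces detected per sampled frame.

    face_counts holds one detector result (a face count) per sampled frame
    while a video plays. Returns 0.0 when no frames were sampled.
    """
    if not face_counts:
        return 0.0
    return sum(face_counts) / len(face_counts)

def rank_videos(samples_by_video):
    """Sort video ids from most to least watched by their attention quotient."""
    return sorted(samples_by_video,
                  key=lambda vid: attention_quotient(samples_by_video[vid]),
                  reverse=True)
```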

Tech: I used IP cameras for the video capture. Below is the code:

Trading Faces – Big Screen Edition

As people stand in front of a mirror, this application uses face detection to find the faces in the video and then displays them with just the faces switched. Each person looks normal except that they have someone else’s eyes, nose and mouth. I had gotten this far before. In this exercise I wanted it to work for a crowd standing in front of the large screen display at IAC, in the Gehry building. This required that the faces be shared across multiple applications, so today I did the networking for that. If I had more time, I would add an oval alpha mask so the faces blend better. This was done as the Monday project in the 7 projects in 7 days (5 in 5 for me) festival at ITP.
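The oval alpha mask mentioned above could look something like the following minimal sketch. Alpha is 1.0 in the middle of the face patch and fades to 0.0 at the edge of the inscribed ellipse, so a swapped face blends into the surrounding head. The `feather` parameter and the function names are assumptions. Pixels are plain grayscale numbers to keep the example self-contained.

```python
def oval_alpha(width, height, feather=0.2):
    """Build an elliptical alpha mask (values 0.0 to 1.0) for a width x height face patch.

    Pixels well inside the ellipse inscribed in the patch are fully opaque (1.0);
    alpha fades linearly to 0.0 over the outer `feather` fraction of the radius.
    """
    cx, cy = (width - 1) / 2, (height - 1) / 2
    rx, ry = width / 2, height / 2
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            # Normalized elliptical distance: 0 at the center, 1 on the ellipse edge.
            d = (((x - cx) / rx) ** 2 + ((y - cy) / ry) ** 2) ** 0.5
            if d <= 1.0 - feather:
                row.append(1.0)
            elif d >= 1.0:
                row.append(0.0)
            else:
                row.append((1.0 - d) / feather)
        mask.append(row)
    return mask

def blend(dest, src, alpha):
    """Composite one pixel value: alpha * src + (1 - alpha) * dest."""
    return alpha * src + (1 - alpha) * dest
```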

Tech: I used the OpenCV library for Processing for the face detection. I used IP cameras for the video capture. Below is the code:

Graffiti Wall

This is a ten-foot video screen on a wall at the Liberty Science Center that allows visitors to spray virtual graffiti using light-emitting spray cans.

I worked with Tom Igoe, Stephen Lewis, and Joseph O’Connell to develop this. I worked mostly on the video tracking software.
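Here is a minimal sketch of one common way to track a light-emitting spray can. It finds the brightest pixel in the camera frame and treats it as the nozzle only if it is bright enough. It assumes a grayscale frame and a threshold chosen for the room's lighting. `find_spray_point` is a hypothetical name, and the actual Liberty Science Center tracking software may have worked quite differently.

```python
def find_spray_point(gray_frame, threshold=240):
    """Return (x, y) of the brightest pixel if it is at least `threshold`, else None.

    gray_frame is a list of rows of 0-255 brightness values. A lit spray-can
    nozzle is assumed to be the brightest thing in the camera's view.
    """
    best_value, best_xy = -1, None
    for y, row in enumerate(gray_frame):
        for x, value in enumerate(row):
            if value > best_value:
                best_value, best_xy = value, (x, y)
    if best_value < threshold:
        return None  # can is off (trigger not pressed) or out of view
    return best_xy
```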

Comicable

Check it out at: http://comicable.com/

“The Never-Ending Break-Up Make-Up Storytelling Comic Book Generator” (or NEGBUMCSBG as we like to call it) arose from an interest in storytelling, comics, and the desire to create a shared space that not only allowed participants to create stories but also allowed individuals to locate and connect similar stories, or other similarly minded storytellers.

Physical Computing Book

The computer revolution has made it easy for people with little to no technical training to use a computer for such everyday tasks as typing a letter, saving files, or recording data. But what about more imaginative purposes such as starting your car, opening a door, or tracking the contents of your refrigerator? “Physical Computing” will not only change the way you use your computer, it will change the way you think about your computer: how you view its capabilities, how you interact with it, and how you put it to work for you. It’s time to bridge the gap between the physical and the virtual, time to use more than just your fingers to interact with your computer. Step outside of the confines of the basic computer and into the broader world of computing.

Trading Glances

Trading Glances allows people to trade glances separated in time. The installation consists of a screen displaying faces streaming by as if the viewer were passing people in the street.

As the viewer watches the other person’s face, the system records their face and precise eye movements. Later their face is added to this stream of faces in the installation and on the project web site. People can go to the site to see who glanced at them and replay exactly how another person’s gaze travels across their face. One’s eye movements can betray very private preferences, and yet they are usually publicly viewable. This project tries to invade the privacy of the person doing the surveillance.

Xena Footnotes

This is a chat environment that allows fans to congregate around their favorite part of the Xena television show. This interface differs from my movie chat, Time Space Chat, because the clip is broken down into segments, which are depicted by the bars of the bar chart. The height of each bar maps to the number of comments for that segment. Comments are distributed synchronously to other people logged on at the same time and are also stored for future users. The software works for all types of streaming media. Special moderators can log on and edit the chat. Chat is automatically censored for profanity. There is also a sniffer that detects the appropriate version of the clip to play for the user’s connection speed. I worked with Sharleen Smith and Yaron Ben-Zvi at Oxygen.
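The bar chart described above can be computed by bucketing comment timestamps. This minimal sketch assumes equal-length segments. The real segments may have been cut by hand around scenes. `comment_histogram` is a hypothetical name.

```python
def comment_histogram(comment_times, clip_length, segment_count):
    """Count comments per equal-length segment of a clip.

    comment_times are positions in seconds within the clip; each count becomes
    the height of one bar in the bar chart. Times outside the clip are ignored.
    """
    counts = [0] * segment_count
    segment_length = clip_length / segment_count
    for t in comment_times:
        if 0 <= t <= clip_length:
            # A comment made exactly at the end belongs to the last segment.
            index = min(int(t // segment_length), segment_count - 1)
            counts[index] += 1
    return counts
```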

Microcontroller Vision

Project Description: This was an experiment to bring the video tracking and video recognition that are very popular at ITP into the realm of very small and inexpensive microcontrollers. It is a continuation of a project that used a PIC microcontroller and a QuickCam. With the introduction of very cheap CMOS cameras and very fast SX microcontrollers, this tool could achieve greater speed and resolution. At the same time, a group at CMU developed a commercial kit that uses the same components (with better engineering).

Technical Notes: I wrote the software in C for an SX chip and built the circuitry to connect it to a CMOS camera from OmniVision.
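Memory is the hard constraint on a small microcontroller, because there is no room to store a whole frame. Here is a minimal sketch of color tracking that works line by line as the camera streams pixels, keeping only running sums. It is written in Python for readability rather than C for the SX. Pixels are single values rather than RGB, and `track_color` is a hypothetical name.

```python
def track_color(rows, lo, hi):
    """Return the centroid (x, y) and pixel count of pixels whose value is in [lo, hi].

    `rows` is any iterable yielding one row of pixel values at a time, the way a
    camera streams lines to a microcontroller. Only three running sums are kept,
    so no frame buffer is needed.
    """
    sum_x = sum_y = count = 0
    for y, row in enumerate(rows):
        for x, value in enumerate(row):
            if lo <= value <= hi:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None, 0
    # Integer division mirrors what a small microcontroller without floating point would do.
    return (sum_x // count, sum_y // count), count
```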

Eyebeam Atelier Electronic Poster

Project Description: This was a project to propose a building design for a new Arts and Technology home for the Eyebeam Atelier by Diller and Scofidio Architects.

This was a collaboration with Deane Simpson at Diller + Scofidio.

Technical Notes: I designed the system for incorporating DVD video into the 1-inch-thick poster.

Mirror Play 4-7

Project Description: This project is a continuation of three previous MirrorPlay projects that experiment with mirrors that selectively capture a reflection. This iteration still keys out the background but switches between different criteria for digitizing the foreground: stomping, heads, skin, and suddenness.

STOMPING MODE

In stomping mode, the foreground image is added permanently to the background when the user stomps their foot. This allows a user to compose a collage by placing themselves or holding things in front of the mirror and then stomping when everything is in the right position.

SUDDENNESS MODE

In suddenness mode the mirror records the foreground when it suddenly changes. If a person stands perfectly still, they will disappear, but if they then jump suddenly, their image will be recorded. The result is a collection of images of people making a sudden transition from a meditative state to a frantic state.
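Suddenness mode needs a measure of how much the picture changed between consecutive frames. Here is a minimal sketch that assumes grayscale frames of equal size and a mean-absolute-difference threshold chosen by hand. In the installation the comparison would apply to the keyed foreground rather than the whole frame. The function names are hypothetical.

```python
def frame_difference(previous, current):
    """Mean absolute difference between two equally sized grayscale frames."""
    total = 0
    pixels = 0
    for prev_row, cur_row in zip(previous, current):
        for p, c in zip(prev_row, cur_row):
            total += abs(p - c)
            pixels += 1
    return total / pixels if pixels else 0.0

def sudden_frames(frames, threshold=30.0):
    """Yield indices of frames that differ suddenly from the frame before them."""
    for i in range(1, len(frames)):
        if frame_difference(frames[i - 1], frames[i]) > threshold:
            yield i
```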

HEAD MODE

In the heads mode, if one person is standing in front of the mirror the software removes their head and floats it around the screen bouncing off the walls.

If two or more people stand in front of the mirror, the software takes the head from one person and places it on the shoulders of the next person.

Typically people are amused by this, and the software keeps a collection of smiling faces and displays them when no one is standing in front of the mirror.

SKIN MODE

In skin mode the mirror reflects skin. The software blends new skin on top of old skin until the mirror is covered with various flesh tones and hints of features. This was too disgusting, so I dropped it.

The mirror is mounted at the back on a hinge. To switch between “HEAD”, “STOMP” and “SUDDENNESS” modes, the user deflects the mirror slightly by turning it on that hinge.

Technical Notes: This was written as a Java application using QuickTime for video digitizing.

Internet Television Station

A series of experiments in combining television and internet technologies.

1) Roaming Close Up

This is a client for viewing streaming video. First, a high-resolution still image of the studio background is displayed. A small rectangle of full-motion streaming video, a zoomed-in shot from the studio camera, is then superimposed on the wide shot. The rectangle of video moves around on the client’s machine depending on where the camera in the studio is aimed. Another version had the video leave a trail, so that the background would always be updated by the video passing over it.
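Here is a minimal sketch of placing the close-up rectangle on the wide still. It assumes that the studio reports the camera's pan and tilt in degrees, that a pan and tilt of zero point at the center of the wide shot, and that angles map linearly to pixels. All function and parameter names are hypothetical.

```python
def closeup_position(pan_deg, tilt_deg, wide_width, wide_height,
                     wide_hfov_deg, wide_vfov_deg, box_width, box_height):
    """Top-left corner (x, y) where the close-up video rectangle is drawn on the wide still.

    Assumes pan/tilt of 0 points at the center of the wide shot and that angle maps
    linearly to pixels (a rough approximation for modest fields of view).
    """
    center_x = wide_width / 2 + (pan_deg / wide_hfov_deg) * wide_width
    # Tilting up (positive tilt) moves the box up, i.e. toward smaller y.
    center_y = wide_height / 2 - (tilt_deg / wide_vfov_deg) * wide_height
    x = round(center_x - box_width / 2)
    y = round(center_y - box_height / 2)
    # Keep the rectangle fully on top of the background image.
    x = max(0, min(wide_width - box_width, x))
    y = max(0, min(wide_height - box_height, y))
    return x, y
```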

2) Video Cursor

This is a software system for broadcasting video commentary about very precise parts of web pages. It allows one person to stream an image of themselves talking in a small window that floats above the web pages of a large audience. The speaker can move this small video window to a particular area of the web page and have it move over that same part of the web page for everyone in the audience. It is a little bit like a video cursor.
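A shared video cursor only works if the speaker sends a position relative to the page rather than to their own window, because every audience member may be scrolled to a different spot. Here is a minimal sketch that assumes every viewer sees the same page layout. The function names are hypothetical.

```python
def to_page_coords(viewport_xy, scroll_xy):
    """Speaker side: convert a window position to a position on the page itself."""
    return (viewport_xy[0] + scroll_xy[0], viewport_xy[1] + scroll_xy[1])

def to_viewport_coords(page_xy, scroll_xy, viewport_size):
    """Audience side: place the page position in this viewer's window.

    Returns None when that part of the page is scrolled out of view.
    """
    x = page_xy[0] - scroll_xy[0]
    y = page_xy[1] - scroll_xy[1]
    if 0 <= x < viewport_size[0] and 0 <= y < viewport_size[1]:
        return (x, y)
    return None
```

A viewer who is scrolled somewhere else sees nothing until the commented part of the page comes into view.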

3) Real Person, Virtual Background

Technical Notes: These were done using Shockwave and JavaScript on the client side, Director applications for studio control, Java for relaying commands, and either QuickTime or Real for streaming video.

The Difference that Doesn’t Make A Difference

Glimmers

At ITP we believe that computational media will make possible exciting new artistic forms. Unfortunately, there is not much evidence to back up that belief. In the arts, computers are used to create work more efficiently in traditional linear or static forms. Doubters say that you will always break the spell on the audience’s imagination when you ask them to interact. We have found some interesting glimmers in our Physical Computing classes at ITP by taking the opposite approach: demanding much more of more parts of the user. I will talk here a little bit about the theoretical background for Physical Computing and then very practically about how we implement it in our curriculum.

Computers for the Rest of You

Merpy Puppets

Project Description: Merpy.com is a children’s website that features the animated, musical, and interactive stories of several characters by Marianne Petit. This project set out to build life-size puppets of the web characters. The user would face the puppet theater and advance the story by manipulating a traditional pop-up.

Technical Notes: I worked with Marianne to create the life-size motorized puppets. I built a microcontroller circuit to control them and wrote an authoring tool in Macromedia Director/Lingo for creating sequences of animations for the puppets.

MirrorPlay3

Project Description: This project is a continuation of two previous MirrorPlay projects that experiment with mirrors that selectively capture a reflection. In previous versions I used the mouse and physical frames to determine the area to be captured. In this case, I wanted to use medium motion as the method of setting the area of the mirror to digitize. The application keys out the unchanging background and ghosts the fast-changing foreground. When the foreground stays the same for a while, it gets permanently added to the reflection.
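The rule above, where the foreground gets added once it stays the same for a while, can be sketched per pixel. Count how many frames a pixel has held steady, and copy it into the background once the count passes a limit. This minimal sketch assumes grayscale frames stored as nested lists and hand-picked thresholds. `update_background` is hypothetical, and the caller is responsible for keeping the previous frame.

```python
def update_background(background, frame, still_counts, previous,
                      diff_threshold=20, frames_to_absorb=30):
    """One step of a 'stay still and become part of the reflection' background.

    All arguments are equally sized 2-D lists (still_counts holds ints) and
    background/still_counts are updated in place. A pixel that has not changed
    since the previous frame accumulates a count; once it has been still for
    frames_to_absorb frames it is copied into the background.
    Returns a foreground mask (True = differs from the background).
    """
    foreground = []
    for y, row in enumerate(frame):
        mask_row = []
        for x, value in enumerate(row):
            still = abs(value - previous[y][x]) <= diff_threshold
            still_counts[y][x] = still_counts[y][x] + 1 if still else 0
            if still_counts[y][x] >= frames_to_absorb:
                background[y][x] = value  # permanently added to the reflection
            mask_row.append(abs(value - background[y][x]) > diff_threshold)
        foreground.append(mask_row)
    return foreground
```

Ghosting the fast-changing foreground would then be a matter of drawing the masked pixels semi-transparently over the background.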

I am trying to install this in the storefront windows in the base of Tisch.

Technical Notes: This was written as a Java application using QuickTime for video digitizing. Once installed, it will use rear-screen video projection.

Shadow Conferencing

Project Description: This is a videoconferencing system that can connect up to twelve people across the Internet. Each user has a camera that is aimed towards them. The camera digitizes an outline of that individual and relays it to the other people in the conference. The camera captures the gesture and the general appearance of the user without the intrusive image detail that many users dislike about ordinary video conferencing systems that take the full video image. Using outlines has the advantage that they can be overlaid on each other without obscuring one another. Instead of the usual split screen grid (as seen, for example, in the opening credits of The Brady Bunch), this allows for more interesting interfaces where users can express themselves with the placement of their outline. Finally, this type of conferencing uses much less bandwidth, so more people can participate, with better frame rates. Users can therefore participate over slower connections. I have also worked on versions that allow the level of detail to be adjusted from bare outlines, to internal edges, to cartoon colorization.
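The bandwidth saving comes from sending only the edge of a person's silhouette instead of every pixel. Here is a minimal sketch of extracting that edge from a silhouette mask. Separating the mask itself from the background is assumed to happen elsewhere, and `outline` is a hypothetical name.

```python
def outline(mask):
    """Return the set of (x, y) silhouette pixels that touch a non-silhouette pixel.

    mask is a 2-D list of booleans (True = person). Pixels on the image border
    count as touching the outside.
    """
    height, width = len(mask), len(mask[0])
    edge = set()
    for y in range(height):
        for x in range(width):
            if not mask[y][x]:
                continue
            for nx, ny in ((x - 1, y), (x + 1, y), (x, y - 1), (x, y + 1)):
                if not (0 <= nx < width and 0 <= ny < height) or not mask[ny][nx]:
                    edge.add((x, y))
                    break
    return edge
```

For a filled silhouette, the outline is a small fraction of its pixels. Outline points from several people can also be drawn on top of one another without hiding anyone.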

Technical Notes: I wrote the client or end-user software as a Java Applet using QuickTime for the video digitization. I wrote the UDP server as a Java application. NYU is pursuing a patent on this idea.

Puppet Long Underwear

This is a glove that senses hand movement and controls a puppet over a live Internet broadcast. A microphone input is also used to control the puppet. The puppet control information is delayed and synchronized with the live video stream.

This was inspired by the work of Sarah Teitler & Jade Jossen.

Technical Notes: I used flex sensors connected to a Basic Stamp to sense the hand movements and Geoff Smith’s Director Xtra for sensing the microphone volume. I programmed both the broadcasting module and the client module in Macromedia Director/Lingo outputting to Shockwave. I used the Multi-User Server for synchronous networking and the Sorenson Broadcaster for the live streaming video.

BubbleVision

Description: This is a system for television producers to synchronize events on web pages with events on television. The tests of this system were very successful, and it is being developed to accompany Double Dare, a game show on Nickelodeon.

This was a collaboration with Shannon Rednour, Ray Cha, Evan Baily and Jason Root at Nickelodeon, Viacom Interactive Services

Technical Notes: I wrote the software in Macromedia Director/Lingo outputting to Shockwave. I used the “Multi-user Server” for the synchronous networking.

Trivia/Polling Web Game Engine

Project Description: This system allows television producers to easily create games for the Web that complement their on-air programming. The system consists of several modules. The first is a web interface for writers to create questions for the Web audience. The second module is a web interface for producers to arrange questions into a timeline for a show and to schedule the question playback on the web. There is also a centralized SQL database for storing all the questions and shows. The third module, or web interface, is for the producer to play back the show on the web and keep score. There is also a bot in the playback engine that will automatically choose the best question within a range set by the producer, so the system can run shows continuously. Finally, the last module contains an interface for the end user that displays the questions, the scores, and the chat of other contestants and relays the users’ answers to the playback engine.

I worked with Ethan Adelman at Oxygen Media.

Technical Notes: I wrote all the interfaces in Macromedia Director/Lingo outputting to Shockwave and the middleware in Perl, and the database is in MS-SQL.

Dunk Yaron Catapult

This project allows Web users to control elements in a live television broadcast. In this case, the Web audience can activate a catapult that hurls actual objects onto the television studio stage.

On the home television we see a young man sitting in a dunk tank reading poetry. When a web user successfully aims at the dunk lever, he is dunked. This is a segment in a late night circus called UTV.

This was a collaboration with Sharleen Smith, Yaron Ben-Zvi, Jed Ela, Ruel Espejo and Wayne Chang of Oxygen Media, Convergence Lab.

Technical Notes: I wrote both the Web interface and the producers’ interface for picking contestants in Macromedia Director/Lingo outputting to Shockwave. I used the Multi-User Server for the networking. I built the electronics and programmed, in Basic, the microcontroller that senses the motors in the catapult.

Booty Cam

In most chat environments we rely solely on text. Expression, intonation, and gesture do not get conveyed. As Goffman says, “only what you give out, not what you give off.” This project is an experiment to bring the added context of a person’s expression into a 3D virtual chat environment. In this world, the user can shake their booty in front of the camera and capture an animation that is then sent to all other clients. It also looks at the idea of navigation by similarity. Most 3D worlds use a travel metaphor for navigation. In this world you move yourself into the company of other people with similar booty moves by moving your booty or by stealing other people’s booty moves. This was a collaboration with Lili Cheng and Sean Kelly of Microsoft’s social computing group.

Technical Notes: I wrote the video tracking software for capturing booty movement as a Java Applet. I did the scripting for the Microsoft VWorlds software in JavaScript.

Ghost Cam

Project Description: This is a webcam that only sends outlines of fast-moving objects in its view. Only after an object has been still for a while does it get added as an image to the background. Viewers can move their mouse to express interest in different areas of the image. The cursor coordinates are sent to the other users and show up as highlighted areas of interest.

I created several versions of this application. One version automatically refreshed the image under the user’s mouse. This webcam was set up in the lobby at ITP with a display that let people in the lobby see the areas of interest to web viewers. It also allowed web viewers to shout out to people in the lobby using text to speech.
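Here is a minimal sketch of turning viewers' cursor positions into highlighted areas of interest. It counts the cursors that fall in each cell of a coarse grid laid over the webcam image. The grid size and the function name are assumptions, and the original may have highlighted areas differently.

```python
def interest_grid(cursors, image_width, image_height, cols=8, rows=6):
    """Count viewers' cursors falling in each cell of a cols x rows grid over the image.

    cursors is a list of integer (x, y) pixel positions; positions outside the
    image are ignored. Cells with higher counts would be highlighted more strongly.
    """
    grid = [[0] * cols for _ in range(rows)]
    for x, y in cursors:
        if 0 <= x < image_width and 0 <= y < image_height:
            col = x * cols // image_width
            row = y * rows // image_height
            grid[row][col] += 1
    return grid
```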

Technical Notes: The video digitizing software and the server were written as Java applications.

Big Mosh

This is a prototype for an interactive television show where the interaction is on the scale of a building or an entire neighborhood. For instance, the Upper West Side could play the Upper East Side in a game of volleyball. A camera placed on Central Park South could be aimed northward to include a view of Central Park West on the left of the screen and 5th Avenue on the right. People living within view of the camera can turn their lights on and off to register with the software. The image from the camera is overlaid with a virtual object and shown live on television. People in their homes can push the object back and forth across the park simply by turning their light switches on and off.
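Here is a minimal sketch of the game logic. It assumes the software knows the brightness of each window region on the two sides of the camera view and counts a window as lit above a fixed threshold. Lit windows on the left (west) push the object right, and lit windows on the right (east) push it left. The function names and constants are hypothetical.

```python
def count_lit(window_brightness, threshold=180):
    """Number of window regions whose brightness (0-255) counts as a light turned on."""
    return sum(1 for b in window_brightness if b >= threshold)

def step_ball(ball_x, lights_left, lights_right, field_width, push_per_light=2.0):
    """Move the virtual ball one step.

    Each lit window on the left side pushes the ball right, and each lit window on
    the right side pushes it left. Returns the new x position, clamped to the field.
    """
    force = (lights_left - lights_right) * push_per_light
    return max(0.0, min(field_width, ball_x + force))
```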

Technical Notes: For the installation I used a poster of some buildings and a wall full of light switches. I wrote this software using Macromedia Director/Lingo and Danny Rozin’s Track Them Colors Xtra.

Space of Faces

This is a conceptual space of all possible faces. As the user contorts a cartoon face, they navigate through the space. Related faces are displayed next to the user’s face. The user can quickly navigate toward another person’s face by clicking on, and thus stealing, their features. This is an interface experiment trying to improve on the travel metaphor used in most spatial interfaces, for instance the 2D Macintosh desktop or 3D virtual environments. These break down with the scale and interactivity of the Internet, where more people are able to contribute material rather than merely view it. In traditional interfaces, people place themselves in one spot along the dimensions of x, y, and z. They will be related to their neighbors only along those 2 or 3 dimensions, or along some category mapped to those dimensions. Searching for other people becomes as tedious as traveling on foot through an enormous and growing city. Internet search engines avoid this problem by allowing documents to be related along as many dimensions as there are words in the document, but they abandon the spatial interface altogether and look more like the old DOS interface. Space of Faces tried to take the best from these two interface techniques.
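The contrast with the travel metaphor can be made concrete. If each face is a vector of feature values, “related faces” are simply the nearest neighbors in that many-dimensional space, and stealing a feature copies one coordinate. Here is a minimal sketch under those assumptions. The feature names and functions are hypothetical.

```python
import math

def nearest_faces(my_face, others, k=3):
    """Return the names of the k faces closest to my_face in feature space.

    Each face is a dict of feature name -> value (e.g. eyebrow tilt, mouth curve),
    so faces are related along as many dimensions as there are features.
    """
    def distance(a, b):
        return math.sqrt(sum((a[f] - b[f]) ** 2 for f in a))
    ranked = sorted(others, key=lambda name: distance(my_face, others[name]))
    return ranked[:k]

def steal_feature(my_face, other_face, feature):
    """Copy one feature from another face, which moves me toward that person."""
    moved = dict(my_face)
    moved[feature] = other_face[feature]
    return moved
```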

Technical Notes: I wrote the web client as a Java applet and the server as a Java application connected using JDBC to a MS-SQL database.

Time Space Chat

This is a computer conferencing system for watching movies. Many people may be watching or listening to the same linear media but only the comments of other people viewing a frame close in time to the frame you are viewing will be displayed prominently. Other comments blur in the periphery. Each person has normal control over the movie (pause, fast forward, rewind) or they can slave themselves to another user’s control.

This software associates a person’s comments with the given moment in the linear media that they were viewing when they made the comment and sends the comment out over the network. The software then filters comments coming in over the network according to how close they are chronologically or thematically to the frame in the linear media that the user is watching. The association works both ways. People who are experiencing the same moment of a clip would naturally be more interested in each other’s comments. Conversely people who like talking about a particular topic might want to be represented by a particular moment of the clip.

This is an improvement over techniques like chat rooms, channels, or threads in conventional conferencing software because: 1. users form groups without much conscious effort, merely by showing a preference for which part of the media to watch; and 2. associations lie along a continuum, without the discrete boundaries of chat rooms, channels and threads.
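Here is a minimal sketch of the chronological filter described above. Each comment gets a prominence score that falls off linearly with the gap between the moment it was made and the moment you are watching, and low-scoring comments go to the blurred periphery. The linear falloff, the window and the cutoff are assumptions, and thematic closeness is left out.

```python
def comment_prominence(comment_time, my_time, window=30.0):
    """Weight from 1.0 (same moment) down to 0.0 (window seconds or more away)."""
    gap = abs(comment_time - my_time)
    return max(0.0, 1.0 - gap / window)

def arrange_comments(comments, my_time, window=30.0, cutoff=0.5):
    """Split (time, text) comments into prominent ones and a blurred periphery.

    Both lists are ordered from most to least relevant.
    """
    scored = sorted(((comment_prominence(t, my_time, window), text) for t, text in comments),
                    reverse=True)
    prominent = [text for score, text in scored if score >= cutoff]
    periphery = [text for score, text in scored if score < cutoff]
    return prominent, periphery
```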

Technical Notes: I wrote this in Macromedia Director/Lingo exported to Shockwave and networked it using Macromedia’s Multi-user Server. NYU is pursuing a patent on this idea.

Ambigraph

This is a wristpiece for studying the objective passage of your day. Your subjective memory of the passage of your day is weighted toward moments that pop into your conscious attention. This wristwatch attempts to automatically log the landmarks of your day by recording changes in ambient conditions such as heat, light and orientation. Each activity in your day usually has a signature combination of these ambient measurements. The Ambigraph logs the data and uploads it into a display on your computer.

For instance, you can tell how often you went to the bathroom, how long it takes you to walk to work, how much time you spent staring at the computer or talking on the phone, how much you move in your sleep, and how long it takes you to brush your teeth. This might be more than you want to know, but the theory is that activities with a great discrepancy between perceived and actual time should be either avoided or pursued, depending on which way the discrepancy runs.
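One way to “log the landmarks” on a device with very little storage is to record a reading only when some sensor has changed by more than a threshold since the last logged reading. Here is a minimal sketch under that assumption. The sensor names, the thresholds and `log_landmarks` are hypothetical.

```python
def log_landmarks(samples, thresholds):
    """Keep only the samples where an ambient reading jumps.

    samples: list of (timestamp, {"heat": .., "light": .., ...}) in time order.
    thresholds: minimum change per sensor that counts as a landmark.
    The first sample is always logged as the starting point. Each reading is
    compared with the last *logged* reading, so slow drift eventually registers too.
    """
    landmarks = []
    last = None
    for timestamp, reading in samples:
        if last is None or any(abs(reading[k] - last[k]) >= thresholds[k] for k in thresholds):
            landmarks.append((timestamp, reading))
            last = reading
    return landmarks
```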

Technical Notes: The data gathering and storage uses a Basic Stamp with tilt, heat, and light sensors. The visualization was created using Macromedia Director/Lingo.

Slow Glass

Project Description: Inspired by a science fiction short story, the idea is to give glass a memory. If you replace one pane of your window with memory glass, you can capture the view from that pane in the window at every moment. As the window ages, you see the changes that time brings — people, technologies and fashions change, neighborhoods transform and people grow up.

In the installation, a user can alter the time shown in the memory pane by spinning a globe, which serves as the controller.

Technical Notes: I wrote the capturing software as a Java application and used a Basic Stamp for the globe’s microcontroller.

Panoramic Narrative

Project Description: This is an experiment with interactive narrative. Many conceptions of interactive narratives ask the audience to direct the course or change elements of the story. These run the risk of breaking the audience’s spell as the action pauses, turning off writers who don’t want to learn how to make decision trees, and bankrupting producers who have to shoot many alternate versions of each scene.

Using panoramic narratives allows the author to always keep one linear flow while letting the user construct a different story depending on where they focus. Writing for this would be more like writing for the theater, but possibly with a greater feeling of immersion because the fourth wall stays intact.

Technical Notes: This project was shot with an array of digital cameras. Synchronous frames from each camera were then stitched together. I did the automation of this process in Applescript and the stitching in QuickTimeVR Authoring Studio. The playback was programmed in Macromedia Director/Lingo and was originally an installation using a panning television set constructed for my earlier LampPost piece.

Psychology Experiment

Project Description: I programmed an application for testing response latencies as a measure of accuracy. I worked with Dr Brian Corby, Fordham University.

Technical Notes: The application was written in Director with Xtras added to achieve millisecond timing and to port logged data into standard statistical analysis software.

Rock and Roll Hall of Fame Trivia Game

Project Description: I programmed a web-based trivia game to promote the Rock & Roll Hall of Fame Awards Show. The work was done for Chris Hough at Rock and Roll Hall of Fame, Viacom Interactive Services.

Technical Notes: The game was written in Macromedia Director/Lingo exported to Shockwave, with a connection to a Perl/CGI script.

On-Line Football Game

Project Description: I programmed a web-based twitch game, complete with scoring, to promote NCAA football. Done for GTE as a client of Ogilvy One Interactive.

Technical Notes: The game was written in Macromedia Director/Lingo exported to Shockwave, with a connection to a Perl/CGI script.

Mind Probe On-Air

Project Description: I served as the chief technical architect in the design and implementation of a simultaneous live television and web-based broadcast of a game-show format program using telephony as a controller. I developed a real-time synthetic virtual studio that was controlled by various motion sensors attached to actors. I participated in the concept development and collaborated with 3D designers, actors, writers, and television producers to create this prototype.

This was a collaboration with Der Hong Yang, Matt Ledderman, Sharleen Smith, Jamie Biggar, Leo Villareal and Tracy White.

Technical Notes: The main game control was written in Macromedia Director. The face tracking was done using Danny Rozin’s Xtra for Macromedia Director and custom headgear. The DTMF decoding and call progress were done using PCI cards. The virtual world was created and rendered in Cosmo as VRML. The control of the VRML was done as a Java Applet. All networking was done using TCP/IP socket connections.

Podium

Project Description: A podium to be used as a musical instrument. I created a surface onto which users could place various found objects. The identity, position and orientation of the objects, as sensed by a video camera, changed the parameters of a physical model in the VL1 MIDI synthesizer. The notes played by the synth were determined by another musical instrument. I worked on this with Danny Rozin, Geoff Smith and Dominick at Interval Research, Expression Group.

Technical Notes: I used Macromedia Director/Lingo with Danny Rozin’s Video XTRA to track the objects. I used HyperMidi to communicate with the MIDI synthesizer.

Networked Musical Sensor Boxes

Project Description: Participated in a team “Pressure Project” to create networked nodes for musical sensors. The idea was to build black boxes where many different types of sensors can be easily plugged and played without much technical knowledge. My task was to develop the software to configure the nodes.

I worked with Geoff Smith, Bob Adams, Chris Sung and Perry Cook.

Technical Notes: The boxes were built using PIC microcontrollers with a proprietary network protocol. The configuring software was written in Macromedia Director/Lingo.

Mindprobe On-Line

Project Description: I wrote a Java applet in which people could take part in a synchronous chat while competing in a trivia game. I also wrote the game server that controlled the game flow and timing, kept score for all the clients on the network and picked a winner.

This was a collaboration with Sharleen Smith, Jamie Biggar, Nancy Lewis and Tracy White.

Technical Notes: The client was written as a Java applet and the game control in Macromedia Director/Lingo. The scorekeeping was written in CGI/Perl.

Virtualizing Glass

This is an augmented reality device in the form of a magnifying glass. It annotates real-world objects in real space. It also serves as an input device, allowing users to place virtual annotations in physical space. It was exhibited at the SIGGRAPH New York conference.

Technical Notes: I used VRML for the simulation, Director for the video capture and a PIC chip connected to a Quickcam for the tracking.

Space Shots

Space Shots is an application for navigating virtual tours of real estate. It had a novel interface using synchronized floor plan and first-person views. Selecting and rotating nodes in the floor plan view changed the view in the QuickTimeVR file; conversely, navigating in the QuickTimeVR was reflected in the floor plan view. I also developed an authoring environment for quickly creating these CD-ROMs. This was a collaboration with Scott Whitney at Today’s Feature Production Company.


Lamppost

I constructed a camera rig for panoramic time-lapse photography to capture a place over a period of time. I stood on the corner of St. Marks Place and Bowery for 24 hours while the camera rig continually grabbed QuickTimeVR scenes. I then built a television set that could do two things: 1. adjust the pan of the QTVR scene by rotating the set on its base; and 2. adjust the time of day through the channel selector. The user therefore controlled the view, both in time and space. This piece was included in the “Elsewhere” exhibit at The Threadwaxing Gallery (1997).
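The television's two controls amount to indexing a grid of captured views by time and by angle. The sketch below shows that mapping under loudly stated assumptions: the source does not say how many scenes were captured, how many pan positions each panorama was divided into, how many channels the set had, or how its rotation was sensed, so `SCENES_PER_DAY`, `PAN_STEPS`, `num_channels` and `select_view` are all hypothetical.

```python
# Hypothetical sketch of the Lamppost controls: set rotation picks the pan,
# the channel selector picks the time of day. All counts are assumptions.
SCENES_PER_DAY = 96  # assumed: one QTVR scene every 15 minutes over 24 hours
PAN_STEPS = 36       # assumed: each panorama divided into 36 views, 10 degrees apart


def select_view(set_angle_deg: float, channel: int, num_channels: int = 12):
    """Return (scene_index, pan_index) for a given set rotation and channel."""
    # Wrap the rotation into [0, 360) and snap it to the nearest pan step;
    # the final modulo folds an angle just under 360 back to step 0.
    pan_index = round((set_angle_deg % 360) * PAN_STEPS / 360) % PAN_STEPS
    # Clamp the channel, then spread the channels evenly over the capture period.
    channel = max(1, min(channel, num_channels))
    scene_index = (channel - 1) * SCENES_PER_DAY // num_channels
    return scene_index, pan_index
```

For example, turning the set a quarter turn and tuning to channel 7 gives `select_view(90, 7)`, which selects pan view 9 of the scene captured halfway through the day.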

Balance Diagnosis

3D sensors to measure and display an individual’s ability to maintain balance, built for the Hippocrates Project at NYU Medical School.

Web Musical Instrument

Project Description: I adapted the web musical instrument from the Interval Expressions group into a kiosk for the Experience Music Project (then under construction) in Seattle. It was displayed at the Tacoma Art Museum and the Los Angeles County Museum of Art.

I worked with Amee Evans, Geoff Smith and Tom Bellman under the direction of Joy Mountford at Interval Research.

Technical Notes: I used Macromedia Director/Lingo and HyperMidi for connecting to hardware synths and the QuickTime Musical Instruments.

The Stick

Project Description: I was a team member in the development of a complex MIDI musical instrument in the form of a simple broomstick. I developed the sensors and software of the first prototype. The artist Laurie Anderson later used the device, in its fourth iteration, in her Moby Dick performance.

Bob Adams led a great team including Geoff Smith, Michael Brook and John Eichenseer at Interval Research’s Expression Group.

Technical Notes: I built a MAX patch and a connection to the National Instruments MacAdios board that was connected to various pressure sensors on the stick.

VWorlds Prototypes

Project Description: I created several animatics of concept ideas for Microsoft’s VWorlds software. These were created for presentation to Bill Gates at the initial stages of the software development. One design allowed users to go online for help making decisions, another allowed users to watch television together, and a third allowed individuals to write their own obituary and have others vote on their reincarnation.

I worked with Linda Stone and Lili Cheng of Microsoft Research.

Technical Notes: The animatics were made using Macromedia Director.

MindChime

This was an experiment in using a narrative to constrain a virtual environment. I worked with a writer to develop a story about a futuristic environment and an underground resistance. After going through the story, the user has to prove his/her membership in the resistance by taking a test called the “MindChime”. The user then takes on a different appearance in the chat environment depending on his/her test score.

This was a collaboration with the playwright Michael Barnwell and Lili Cheng of the Virtual Worlds Group at Microsoft Research.

Technical Notes: This was created in Macromedia Director/Lingo.

Near There

This is a web application where one person creates a collage using color, circles, squares, lines and text. As an individual makes their collage on the left side of the screen, the most similar collage by a previous user shows up on the right side of the screen. As a user begins to intuit the matching mechanism, they deliberately alter their drawing to place it near another person’s. They then begin to navigate through the vast space of all possible drawings by making one of their own.

This is an interface experiment accommodating applications where many individuals are making contributions. It tries to make the act of contributing a drawing also an act of navigation towards other people.

In addition to standard browser functions like bookmarks, histories and searches, the application also allows people to see who has seen (matched) their collage, and to send email.
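The entry does not say how “most similar” was computed. As one plausible reading, the sketch below reduces each collage to a small feature vector (shape counts plus average color) and returns the stored collage at the smallest Euclidean distance. The `Collage` fields, the feature choice and the color scaling are all assumptions made for illustration, not a description of the original Shockwave/Perl system.

```python
import math
from dataclasses import dataclass


@dataclass
class Collage:
    owner: str
    circles: int
    squares: int
    lines: int
    texts: int
    mean_rgb: tuple  # hypothetical: average fill color, 0-255 per channel

    def features(self):
        # Assumed feature vector: element counts plus color scaled to 0-5,
        # so that color differences weigh roughly like a few shapes.
        r, g, b = self.mean_rgb
        return (self.circles, self.squares, self.lines, self.texts,
                r / 51, g / 51, b / 51)


def nearest(current, previous):
    """Return the stored collage closest to `current` by Euclidean distance.

    Raises ValueError if `previous` is empty (min() of an empty sequence)."""
    target = current.features()
    return min(previous, key=lambda c: math.dist(target, c.features()))
```

Because the match is recomputed on every edit, adding a circle or shifting the color moves the user through this feature space, which is the “drawing as navigation” effect described above. `math.dist` requires Python 3.8 or later.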

Technical Notes: This was created with Beta Shockwave software and Perl/CGI programming using Unix DBMs.

Manage your Money

An application for analysis of home finances for the Wall Street Journal, Interactive Edge.

Expression Musical Instruments

Project Description: I headed a project team looking at building musical instruments within the constraints of desktop PCs. The team developed numerous iterations of many instruments.

I used a kaleidoscope application written by Kate Swann, which took input from the computer’s microphone and gave the desktop different abstract patterns depending on the type of noise in the environment. I then wrote software for a 3D dancing fork that generated its own accompaniment. The most successful piece was called the Web (this was before the WWW was very popular). A beam traced radially around the web like an air traffic controller’s screen. Users could place eggs in the web, and each egg made a sound as the beam swept across it. Users had controls for changing all aspects of the music, including pitch, volume, duration, tempo and instrument, and could also save their compositions. There was also a special interface for more accomplished musicians to create sound palettes for the web.
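The Web’s core behavior, a rotating beam that sounds each egg as it passes, reduces to checking which egg angles fall inside the arc swept on each tick. The sketch below is a hypothetical reconstruction, not the original Lingo: the `Egg` class, the MIDI note field and `sweep` are invented for illustration, and tempo is assumed to be expressed as the number of degrees the beam advances per tick.

```python
from dataclasses import dataclass


@dataclass
class Egg:
    angle: float  # position around the web, in degrees
    note: int     # hypothetical: MIDI note number this egg plays


def sweep(eggs, beam_deg, step_deg):
    """Advance the beam by step_deg (assumed 0 <= step_deg < 360).

    Returns the new beam angle and the eggs crossed during this tick,
    in the order the beam reached them."""
    new_deg = (beam_deg + step_deg) % 360
    hits = []
    for egg in eggs:
        # How far ahead of the old beam position the egg sits, handling wrap-around.
        offset = (egg.angle - beam_deg) % 360
        # An egg exactly at the old position already sounded on the previous tick.
        if 0 < offset <= step_deg:
            hits.append((offset, egg))
    hits.sort(key=lambda pair: pair[0])
    return new_deg, [egg for _, egg in hits]
```

A faster tempo simply means a larger `step_deg` per tick; the wrap-around arithmetic ensures an egg just past 0 degrees is still caught when the beam crosses from 340 to 10.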

I collaborated with Joy Mountford and Geoff Smith, Andrew Hirniak, James Tobias, Amee Evans and Tom Bellman at the Expressions Group, Interval Research.

Technical Notes: Most of these instruments were created using Macromedia Director/Lingo and Hype

Expressions Performance

Project Description: I adapted the Web Musical Instrument from the Interval Expressions group for a live performance. A control box with a physical interface let a performer make several quick changes at once, something not possible with a single mouse. I also slaved the system to a central sound server so it could play with other instruments from the Expressions group.

I worked with Geoff Smith, Bob Adams, Joy Mountford, Michael Brook and John Eichenseer at the Royal College of Art for Interval Research.

Technical Notes: I used a BASIC Stamp for the physical interface, Macromedia Director/Lingo for the on-screen interface and a Sample Cell card for the sound generation.

Prisoner Chat

This was a collaboration between ECHO (the virtual salon/online service) and the SCI-FI Channel. During the weekly airing of the sci-fi cult classic series “The Prisoner,” an ECHO chat session scrolled in a small window at the bottom of the screen. People from around the country telnetted in to become part of a national virtual peanut gallery.

This was a collaboration with Jamie Biggar and Sharleen Smith at SCI-FI Channel, USA Networks Online and Stacy Horn at Echo Communications.

Technical Notes: This was written in Macromedia Director using a Serial Xtra to connect directly to a modem and emulate Telnet.

Red Booth

Project Description: We constructed a booth that was transported to high schools all over the country. In the booth students viewed rehearsals of a television episode. The video paused occasionally to ask the students questions and record video of their replies. Eventually the replies were incorporated into a fully produced show that aired on NBC.

I collaborated with Karen Cooper and Tracy Johnson.

Technical Notes: This was written in Macromedia Director/Lingo connected to a video capture card, controlling a Pioneer laserdisc player using a serial Xtra and an 8mm video camera using a Control-L Xtra.

Collage Narrative

An interactive collaging tool for combining QuickTime movies and QuickTimeVR movies into a narrative and sharing them over a network. The idea was to allow people to make stories and move closer to people making similar stories. The interface made extensive use of contextual pie menus.

Technical Notes: This was written in Macromedia Director/Lingo and connected to a server directly via modem (this was done before Internet technologies were widely used.)

Macromedia Showcase: Designers Studios QTVR

A tour of prominent design houses and studios across the country. It used a custom QuickTimeVR display that was included on the Macromedia Showcase CD-ROM. Among the featured studios, Rodney Greenblat’s was the densest multi-nodal QTVR done to that point. I worked with Cathy Clarke and Lee Swearingen at Macromedia Creative Services for the Macromedia Showcase.

Technical Notes: The photographs were shot on film and digitized onto Kodak’s Photo-CD. They were stitched using MPW and displayed using Macromedia Director/Lingo.

Aeron Chair

This is a machine for taking a high-resolution interactive photograph of the soon-to-be-released Aeron Chair by Herman Miller. I built this for Clement Mok Design and collaborated with a mechanic, a photographer and a graphic designer to take the thousands of still photographs needed to create an object movie. The software for displaying the resulting QTVR movie was written in Director. The display software popped up details of the chair’s features as a user panned and tilted the chair to examine it. Conversely, it spun the chair to display a particular listed feature. This was the most elaborate and highest-resolution object movie at that time. I spoke about it at Viscomm, and it was widely written about and won an I.D. Magazine award for best presentation design.

Technical Notes: The capturing rig used a Director interface, which controlled two large motors using Alpha Products cards and controlled the shutter of what was then a very unusual and sophisticated digital camera from Nikon.

Dan’s View

I made a panoramic time-lapse photograph of the view out a window over a period of 24 hours. Using their touch-tone telephones, television viewers could adjust the angle of the pan or the time displayed. People at home could then replace any particular time or pan angle by sending in a computer graphic over a modem.

Technical Notes: The television interface was programmed using CanDo software on an Amiga attached to an Alpha Products box that decoded the touch-tones. An A-Talk 3 script accepted the transmitted graphics files.

Augmented Reality Chair

This is a chair for overlaying virtual information onto the physical world. This chair, done for Apple Computer, was an augmented reality system that allowed people to extend their desktop to their entire room. Virtual objects could be dragged off the desktop onto any object in the room. A screen hanging off the chair would then overlay that virtual object on that spot in the room. It also allowed a portion of another room to be layered on top of the existing room using QTVR.

Technical Notes: This project was done using a Blue Earth microcontroller, programmed in BASIC. The overlay software was created in HyperCard.

Yorb

Project Description: This was an investigation of interactive television where the viewers create the programming content instead of choosing between more professionally produced alternatives. Urban design, architecture and interior design became interface tools as Manhattan cable viewers were invited to navigate around a virtual world using the buttons on their telephones. Within the world, viewers encountered pictures, sounds and video that had been sent in by other viewers using modem, fax, telephone and Ethernet. The messages seen on TV were also available for distribution over these networks. I conceived, designed and programmed this automated television program. This work was widely written up and presented at numerous conferences such as Imagina in Monte Carlo and the New York Interactive Association. It aired three nights a week on Manhattan Cable Television and was sponsored by NYNEX. It became a showpiece for the department.

I worked with Red Burns, Lili Cheng, Nai Wai Hsu, Eric Fixler and about a million others.

Technical Notes: VPL’s virtual reality software on an SGI machine was used to render the imagery in real time. The SGI machine was located at the Medical School, and the video output had to travel across Manhattan over several different technologies, with an Ethernet connection going back to control the SGI. The system also used a Video Toaster on an Amiga for mixing video, and various boxes for telephone voice and touch-tone input. A Macintosh running HyperCard was the main program for serving up media.

Diplomat Interactive Fiction

An interactive fiction, done at Apple Computer’s Human Interface Group, in which the dramatic arc was customized to uniquely frustrate the user based upon their previous actions.

MirrorPlay1

Project Description: This was a project done for Apple Computer’s Human Interface Group investigating interactive fiction. After working on other projects, including The Diplomat, I thought that any concept involving a branched structure was difficult to make and difficult to watch. Instead, I thought that the interaction should be in the construction of the narrative. To attract ordinary users, the interface would need to be as simple as a mirror. I liked the intuitive way things could then be placed and scaled. At a time when people are bombarded with imagery, users could very easily recycle these images, which appealed to me. Most of all, I liked the effect of having small close-up video clips roughly juxtaposed with wide master shots.

Technical Notes: This software was written in HyperCard using an XCMD to connect to a RasterOps video digitizing board.

Object Maker

At Apple I also experimented with object movies. Capturing an object rather than a scene in an interactive photograph requires a more elaborate mechanism for moving the camera. I built a couple of rigs on my desk, but eventually I was put in touch with John Borden of Peace River Films, who had already designed such a mechanism for photographing Mayan artifacts in connection with his important work on the Palenque project with Bank Street College. Joy Mountford and Mike Mills, my bosses at Apple, agreed to commission John Borden to build a large-scale object maker. I participated in the early design of this machine and wrote the software for displaying the resulting movie, but the bulk of the design and all the construction was done by Peace River Films. The schematic above was created by John Borden. A person could sit in this rig, and a few moments later the machine would create a virtual picture of their entire head. This is essentially still the method used for QTVR objects. The rig was made into a booth and featured in an exhibit and paper at SIGCHI ’92. Several companies, including John Borden’s company, Peace River Studios, and Kaidan, turned this concept into a product. I worked with John Borden of Peace River Films, Mike Mills, Ian Small, Michael Chen, Louis Knapp and Eric Hulteen.

Technical Notes: The control and display software were both written in HyperCard. John Borden (now president of Peace River Studios) designed and constructed the rig.

Apple Computer, Human Interface Group 1992

Paris QTVR

The first series of panoramic images utilizing Eric Chen’s new QTVR stitching technique. All twenty scenes were also captured with sound. I collaborated with Lili Cheng and Michael Chen at Apple Computer Advanced Graphics Group in developing software and interfaces for controlling these pictures and synchronizing them with audio.

Technical Notes: The images were shot on film and then digitized onto Kodak Photo-CD. The panoramas were stitched using MPW. The display software was written in HyperCard.

Shock Sculpture

Project Description: This is an experiment with interactive narrative. Many conceptions of interactive narrative ask the audience to direct the course of the story or change its elements. These run the risk of breaking the audience’s spell as the action pauses, turning off writers who don’t want to learn how to make decision trees, and bankrupting producers who have to shoot many alternate versions of each scene.

Panoramic narratives let the author keep a single linear flow while letting each viewer construct a different story depending on where they focus. Writing for this would be more like writing for the theater, but possibly with a greater feeling of immersion because the fourth wall is intact.

Technical Notes: This project was shot with an array of digital cameras. Synchronous frames from each camera were then stitched together. I did the automation of this process in Applescript and the stitching in QuickTimeVR Authoring Studio. The playback was programmed in Macromedia Director/Lingo and was originally an installation using a panning television set constructed for my earlier LampPost piece.

Project Description: I worked with a team to build a sculpture that would also serve as an evolving hypertext document about Italian Futurist theory. The sculpture was built from wooden balls for nodes and metal rods for connections. Each node had a bar code which, when scanned, would trigger the appropriate text on a computer screen. In addition there was a bin of nodes and rods for a user to add to the sculpture. They could write a comment, print a bar code and add it to the sculpture.

I collaborated with Kate Swann (future wife) and Steve Marino.

Technical Notes: I wrote this in HyperCard with a serial XCMD to connect to the bar code scanner.

Electronic Poster

Project Description: I rebuilt the animated text messaging system installed throughout the World Financial Center. I assessed the needs of the clients, designed the interface, wrote the code, documented the system and trained the users at Olympia & York’s Arts and Events Department. I built an authoring system for building new screens, a system for porting screens from a database, and a system for scheduling the screens’ playback.

I worked with David Bixby of ESI (Edwin Schlossberg Inc.).

Technical Notes: I wrote the system in CanDo on an Amiga connected to a Hash animation module. The system made extensive use of the ARexx language for interprocess communication. It ran 24/7 unattended for years until it was replaced in 1998.

Window Shows


These were a series of shows that aired on Manhattan Cable Television, using touch-tones to direct the video. The first, the Healing Hand, asked people to hold hands via the television; people could call in and have their voices passed on air to give testimony. The second, Marianne Rubberhead, was another healing experiment, but viewers could also rotate the orb and find out more about the oracle. Both of these were done in collaboration with Marianne Petit. The third, Interactive Television Tutorial, walked viewers through the steps necessary to turn off their television set; viewers could use their touch-tones to pace the lessons.

Technical Notes: The programming was done in CanDo on an Amiga. A Video Toaster was used for the video switching and effects. The 3D graphics were pre-rendered in Swivel 3D. The video was pressed onto a laserdisc controlled by the Amiga. A box that I built decoded the touch-tones.
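Several of these shows depended on turning touch-tones into keypresses, which was done in hardware (a homemade box here, a Black Box converter elsewhere). For readers curious what that decoding involves, the sketch below does it in software with the Goertzel algorithm, a standard way to measure signal power at a handful of known frequencies. This is a swapped-in illustration, not what the original boxes did internally. It uses the standard DTMF row and column frequencies, omits the signal-level and twist checks a real decoder needs (so it always returns some key), and assumes an 8 kHz sample rate; `decode_key` is a hypothetical name.

```python
import math

# Standard DTMF frequencies: each key is one row tone plus one column tone.
ROWS = (697, 770, 852, 941)
COLS = (1209, 1336, 1477, 1633)
KEYS = ("123A", "456B", "789C", "*0#D")


def goertzel_power(samples, freq, rate):
    """Signal power at `freq` Hz, computed with the Goertzel recurrence."""
    coeff = 2 * math.cos(2 * math.pi * freq / rate)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev2 ** 2 + s_prev ** 2 - coeff * s_prev * s_prev2


def decode_key(samples, rate=8000):
    """Return the key whose row and column tones are strongest in `samples`."""
    row = max(range(4), key=lambda i: goertzel_power(samples, ROWS[i], rate))
    col = max(range(4), key=lambda j: goertzel_power(samples, COLS[j], rate))
    return KEYS[row][col]
```

Goertzel suits this job better than a full FFT because only eight frequencies matter, so each block of samples costs eight short loops.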

Being There with the Melons

This was a drama of an American couple metamorphosed into melons by their television set. Just as they become comfortable in their new form, a coffee maker left on threatens their existence. The viewer could look about the room using their touch-tone phone as the drama unfolded. It was a linear narrative with an enhanced viewpoint.

Technical Notes: This was HyperCard overlaid on a videodisc. A Black Box DTMF-to-ASCII converter decoded the touch-tones. I used the camera rig that I put together for Apple to capture the scene.

Mayor For A Minute

This was a pie chart where each viewer could shift the consensus on how conflicting priorities should be resolved. A computer graphic pie chart filled the screen. Each slice represented a portion of the city. A viewer could then pick a slice and reallocate resources from one slice to another. While the viewer worked on a slice, video bites advocating spending in that area showed through the slice. The idea was to have this video sent in by community groups on a daily basis, forming a computer-based television network. After a while, a video face would come out of the pie and editorialize on the reallocation. NYU and Apple Computer cosponsored the project. A prototype was demonstrated at SIGCHI 92.
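The key constraint in Mayor For A Minute is that the pie is zero-sum: resources moved into one slice must come out of another. A minimal sketch of that rule, assuming a budget stored as a dictionary of slice names to amounts (the slice names, `reallocate` and `slice_angles` are hypothetical, not taken from the original HyperCard stack):

```python
def reallocate(budget, source, target, amount):
    """Move up to `amount` from `source` to `target`, keeping the total fixed.

    Returns a new dict; the original budget is left unchanged."""
    if source not in budget or target not in budget:
        raise KeyError("unknown slice")
    if amount < 0:
        raise ValueError("amount must be non-negative")
    moved = min(amount, budget[source])  # a slice cannot drop below zero
    new_budget = dict(budget)
    new_budget[source] -= moved
    new_budget[target] += moved
    return new_budget


def slice_angles(budget):
    """Angle in degrees each slice occupies when the pie is redrawn."""
    total = sum(budget.values())
    return {name: 360 * value / total for name, value in budget.items()}
```

For example, moving 10 units from a hypothetical `parks` slice to `schools` in `{"parks": 30, "schools": 50, "transit": 20}` widens the schools slice from 180 to 216 degrees while the total stays at 100.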

Technical Notes: This used a videodisc and later QuickTime controlled by HyperCard. The touch-tones were decoded by a Black Box DTMF-to-ASCII converter.

Dan’s Apartment

An interactive television show that allowed viewers to virtually navigate through my apartment by speaking commands into their phone. This began as an experiment looking for better interface metaphors than the desktop. By putting it on television, it took on a strange voyeuristic quality. It developed a large cable following and provided me with my 15 minutes of worldwide attention. I did this work as a student at ITP, NYU.

Technical Notes: I shot all pathways through my apartment onto video and pressed them to a laserdisc. I built a box using a Voice Recognition chip from Radio Shack and connected it via the parallel port of an Amiga to AmigaVision software that controlled the graphics and the laserdisc.

Golden Gate Navigable Scene

As an intern for Mike Mills, the creator of QuickTime, I wanted to use QuickTime to depict space as well as motion. We went to the top of one span of the Golden Gate Bridge and shot an interactive panoramic photograph. I demonstrated this picture during John Sculley’s keynote address at MacWorld, and it went on to be widely demonstrated at Apple and beyond. This was the beginning of a project called Navigable Movies that eventually became the product QTVR.

This was a collaboration with Michael Chen, Mike Mills, Jonathan Cohen and Ian Small at Apple’s Human Interface Group under the direction of Joy Mountford.

Technical Notes: I built a rig using HyperCard and some relays to control a camera and take pictures.

Pavlovsk Palace

Traveled to Russia with a team from Apple Computer and The National Art Gallery to systematically record all the rooms of a famous summer palace outside St. Petersburg. I customized the rig that I had made at Apple Computer for creating panoramic photographs. These were compiled and published on the QuickTime 1.6 CD as the first-ever navigable scene, soon to be called QTVR.

I worked with Mike Mills, Mitch Yawitz and Eric Chen of Apple and Susan Massie and Kim Nielsen of the National Gallery.

Technical Notes: This used a microcontroller from Radiant Systems, a pan-and-tilt head from Pelco and HyperCard software for control and playback. This configuration was documented, built into a toolkit by Apple and sold by Radiant.

Musical Hand

This was a screen-based musical instrument. It used pre-rendered 3D graphics to depict a human hand twisting and turning in space and striking notes in that space. When the hand intersected one of the growing bubbles, the instrument and pitch sets of the notes changed.

Technical Notes: This was created in Macromedia Director/Lingo using a HyperMidi XCMD. The graphics were pre-rendered in Swivel 3D. The sound was created on a Roland U220 synth.

Human Joystick

Project Description: I made a robot that was controlled by the direction in which a person would lean. A camera was attached to the robot and the video was fed back into a monitor in front of the user. A video camera was mounted on the ceiling above the user’s head. If the user leaned forward, the robot would move forward. By leaning to the right, the robot would move to the right.
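The Human Joystick turns the position of the user’s head, seen from the ceiling camera, into a drive command. The sketch below shows one way to make that mapping, with a dead zone so that standing upright stops the robot. It is hypothetical: the original used the Mandala tracker and AmigaVision, and the pixel coordinates, the 20-pixel dead zone, the assumption that “forward” is toward the top of the overhead image, and the command strings sent to the relay box are all invented for illustration.

```python
def lean_command(head_xy, center_xy, dead_zone=20):
    """Map the tracked head position (pixels) to a drive command.

    Assumes leaning forward moves the head toward the top of the overhead
    image (smaller y) and leaning right moves it toward larger x."""
    dx = head_xy[0] - center_xy[0]
    dy = center_xy[1] - head_xy[1]  # positive when leaning forward
    # Small movements around the upright position are ignored.
    if max(abs(dx), abs(dy)) < dead_zone:
        return "stop"
    # Use whichever axis the user is leaning along more strongly.
    if abs(dy) >= abs(dx):
        return "forward" if dy > 0 else "back"
    return "right" if dx > 0 else "left"
```

Choosing a single dominant axis fits a relay-driven radio-controlled car, which can only switch each motor direction on or off rather than steer proportionally.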

Technical Notes: The video tracking was accomplished using the Mandala system on the Amiga. The robot control was accomplished using AmigaVision and a custom box full of relays. The robot was constructed from a radio-controlled car.