What capabilities does computational media have for depicting and conveying the experience of our minds? In using the new possibilities of machine learning networks to create media, what should we borrow from cinema, social media and virtual reality, and what should we leave behind?

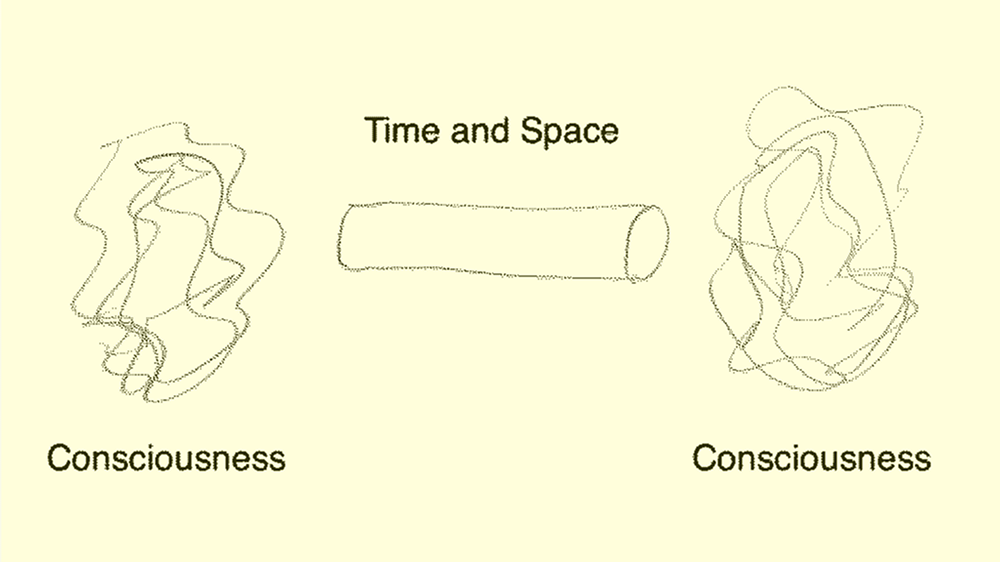

In this course we will start by turning inward to reflect on how our minds transcend time and space and how artificial neural networks might better capture the multidimensional space of our thought. We then turn to cloud networking and databases to share our thinking with other people across time and space. Finally, we need to flatten everything back into 4D interfaces that, while bound to time and space, can reach our embodied, emotional and experiential ways of understanding the world.

The class will operate at a conceptual level, inviting students’ empirical, psychological and philosophical investigations of the nature of their experience and how to convey it with art and story. It will ask students to look critically at existing computational media’s tendencies to bore, misinform, divide or inflame its users.

But this is also very much a coding class. Students will prototype their own ideas for new forms of media, first with machine learning models like Stable Diffusion through Hugging Face APIs or Colab notebooks, then with networking and databases using Firebase or P5 Live Media, and finally with 3D graphics using the three.js library. Students can substitute other coding tools, but game engines will not work for this class. The coding is in JavaScript, with touches of Python, and the class is a natural sequel to Creative Computing.