Below are some half-baked ramblings after a day of binge-scrolling Twitter:

I feel quite overwhelmed by the recent progress in AI and LLMs, especially with GPT-4.

I say this not only because of the scientific breakthrough itself (the technology is incredibly impressive), but because of the downstream impacts of what this means for humanity as a whole. All weekend my Twitter feed has been flooded with content on the topic, and the only other time I’ve noticed “the collective” talk about something at this scale was the brief period between the first few cases of COVID and the first week of March, when things began to shut down. (Caveat: I might also be a product of my information bubble and not realize how deep in it I truly am.)

I’m really not sure what this will lead to, and I hope I’m not being dramatic, but I think we’ve just accelerated a fundamental shift in society on the scale of what we’ve seen with electricity, the internet, and mobile phones.

Or maybe it’s all just hype.

I’ve also noticed quite a few tweets discussing how this moment relates to ideas of consciousness, spirituality, and religion, all topics quite relevant to my thesis. After reviewing my proposal feedback, doing more reading, and doing some personal research, I think I want to experiment with GPT-3/4 LLMs and voice processing + generation to explore the gray area between intelligent machines and our tendency to anthropomorphize non-human entities, while speculating about what this may look like in the future.

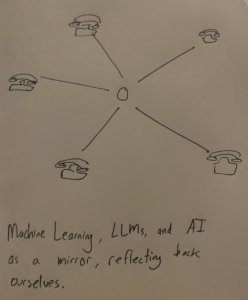

What this may look like (crude 11pm sketch below):

- Use a physical landline phone as an interface between human and “machine”

- Use GPT-3 + text-to-speech (TTS) APIs to simulate a conversation

- Fine-tune/steer these conversations to have distinct personalities

- ????

- Have a successful Thesis presentation

Step 4 is looking to be the most difficult rn. Will sit with the concept a bit more and run it by Sarah and the residents for further thoughts.
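To make steps 2 and 3 a bit more concrete, here’s a rough sketch of what one “turn” of a phone conversation could look like. It’s a minimal sketch under a few assumptions: the caller’s speech has already been transcribed to text (e.g. by a speech-to-text model such as Whisper), and `generate` and `speak` are hypothetical hooks standing in for whatever LLM (GPT-3 or a local model) and TTS service end up being used. It also steers personality with a plain prompt prefix rather than actual fine-tuning. `Persona` and `PhoneCall` are names I made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Persona:
    """A hypothetical personality for the 'machine' on the other end of the line."""
    name: str
    style: str

    def system_prompt(self) -> str:
        # Prompt-based steering (not fine-tuning): describe who the model should be.
        return (f"You are {self.name}, speaking to a caller on an old landline phone. "
                f"{self.style} Keep replies short enough to be read aloud.")


@dataclass
class PhoneCall:
    persona: Persona
    generate: Callable[[str], str]  # hypothetical hook: prompt text -> model reply (GPT-3, local LLaMA, ...)
    speak: Callable[[str], None]    # hypothetical hook: reply text -> audio out of the handset (TTS)
    history: List[Tuple[str, str]] = field(default_factory=list)

    def build_prompt(self, caller_text: str) -> str:
        # Persona instructions, then the transcript so far, then an open slot for the reply.
        lines = [self.persona.system_prompt(), ""]
        for speaker, text in self.history:
            lines.append(f"{speaker}: {text}")
        lines.append(f"Caller: {caller_text}")
        lines.append(f"{self.persona.name}:")
        return "\n".join(lines)

    def turn(self, caller_text: str) -> str:
        # One exchange: build the prompt, get a reply, remember it, and say it out loud.
        reply = self.generate(self.build_prompt(caller_text)).strip()
        self.history.append(("Caller", caller_text))
        self.history.append((self.persona.name, reply))
        self.speak(reply)
        return reply


if __name__ == "__main__":
    # Fake hooks so this runs offline; swap in real LLM/TTS calls later.
    operator = Persona("The Operator", "You are calm and cryptic, and answer questions with questions.")
    call = PhoneCall(operator, generate=lambda prompt: " Who is asking? ", speak=print)
    call.turn("Hello? Is anyone there?")  # prints: Who is asking?
```

Giving each phone (or each number dialed) its own `Persona` would be one cheap way to get the “distinct personalities” from step 3 before trying anything heavier like fine-tuning.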

This concept was also inspired by a tweet I saw earlier today. Someone managed to run a model similar to GPT-3 locally on-device, without needing a network connection, which means the technology in the movie “Her” just got a whole lot closer. This is a fascinating breakthrough and I want to experiment with it.

Introducing LLaMA voice chat!

You can run this locally on an M1 Pro pic.twitter.com/jetTQ5FxcS

— Georgi Gerganov (@ggerganov) March 26, 2023

Some earnest and satirical thoughts/links/ideas/garbage for reference:

And a few more links to keep here for my own reference:

- https://en.wikipedia.org/wiki/Anthropomorphism

- https://danielmiessler.com/blog/ai-is-eating-the-software-world/

- https://github.com/ggerganov/llama.cpp

- https://github.com/ggerganov/whisper.cpp/blob/c353100bad5b8420332d2d451323214c84c66950/examples/talk.llama/talk-llama.cpp#L177

Class Playlist


Really nice post, and I appreciate all the screenshots. I’ve also been pretty overwhelmed reading about all the recent progress in AI… so you are definitely not just in your information bubble (as I doubt we are in the same bubble lol), and I also don’t think it’s just hype. The speed of progress is just so crazy.


Things people took comfort in GPT-3 still being unable to do a month ago, GPT-4 is now doing perfectly this month (plus this ability existed in the lab way before GPT-3 was released). I’m not really in the engineer or expert bubble, but I see a lot of applications on the humanities side on my social media platforms.

My ability to easily write like a highly educated scholar in one second with GPT’s help has shocked and depressed some of my Ph.D. friends. I already removed Google Translate from my bookmarks (it had been there forever, and I thought it would never be replaced) after using the ReaderGPT extension for just one day.

I’ve heard that stand-up comedians’ test results with GPT improved significantly within a month. Famous comedians who felt confident last month that AI wouldn’t replace them are now admitting defeat (not from asking GPT to write an entire joke, but from asking it more specific questions to help structure one, which turns out to produce something far more impressive than what many would have come up with themselves).