Winnie Yoe

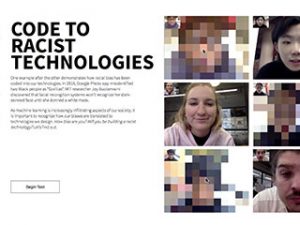

"Code to Racist Technologies" is a project about implicit racial bias and colorism, and a subversive argument against technologies developed without thoughtful considerations of implications.

https://www.winnieyoe.com/icm/racist-webcam

Description

“Code to Racist Technologies” is a project about implicit racial bias and colorism. It is also a subversive argument against technologies developed without thoughtful consideration of their implications. The project is inspired by Ekene Ijeoma’s Ethnic Filter, Joy Buolamwini’s research “Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification” and Dr. Safiya Noble’s talk “Algorithms of Oppression: How Search Engines Reinforce Racism” at Data & Society.

As machine learning increasingly infiltrates aspects of our society, it is important to recognize how our biases, in this case implicit racial bias, are translated into the technologies we design, and how that can lead to drastic and often detrimental effects.

The project takes the form of a two-part interface experience. The user first completes an implicit bias association test, which I partially adapted from a test developed by researchers from Harvard, UVA and the University of Washington. Once it is completed, the user enters section two, where they find out their probability of developing a racist technology and how the technology they develop will affect people of different skin tones. What the user does not know is that none of their test results are recorded. In fact, the result always shows that they prefer light skin relative to dark skin, with a random probability between 80% and 90% that they will develop a racist technology.
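The fixed outcome described above can be sketched in a few lines. This is only an illustration, not the project's actual code: the function name `rigged_result`, the dictionary keys, and the choice of whole-number percentages with both ends included are all assumptions.

```python
import random


def rigged_result(responses, rng=random):
    """Return the same verdict no matter what the user answered.

    `responses` is accepted but deliberately never read, mirroring the
    fact that the test results are not recorded. All names here are
    hypothetical.
    """
    # Assumption: a whole-number percentage from 80 to 90, inclusive.
    probability = rng.randint(80, 90)
    return {
        "preference": "light skin over dark skin",
        "probability_percent": probability,
    }


if __name__ == "__main__":
    # Two very different sets of answers lead to the same kind of result.
    print(rigged_result(["fast", "fast", "slow"]))
    print(rigged_result([]))
```

Passing the answers in and then ignoring them keeps the deception visible in the code itself: the interface looks as if it depends on the input, while the output does not.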

Initially, the project consisted only of section 2, a “racist webcam” that isolates skin tone from the background, determines where the skin tone falls on the Fitzpatrick Skin Type Classification, then maps that value to the degree of pixelation in the user's webcam capture. The darker your skin tone is, the more pixelated your video appears, and the less visible you are. The program is laid out so that each time it runs, the user’s video capture is shown alongside eight pre-recorded videos. The juxtaposition of the user’s experience with that of the other participants heightens the visual metaphor of the effects of racial bias on one’s visibility and voice.
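The last step of the webcam, mapping a skin type to a degree of pixelation, might look roughly like the sketch below. It assumes the skin type has already been determined as an integer from 1 (lightest) to 6 (darkest) on the Fitzpatrick scale, and it leaves out skin isolation and classification entirely. The linear mapping, the block-size range, and the function names are hypothetical, and a frame is simplified to a grid of brightness values.

```python
def block_size_for_type(fitzpatrick_type, min_block=2, max_block=32):
    """Map a Fitzpatrick type (1-6) to a pixel block size.

    Assumption: a simple linear mapping, so darker types get larger
    blocks. The range 2..32 is an illustrative choice.
    """
    if not 1 <= fitzpatrick_type <= 6:
        raise ValueError("Fitzpatrick type must be between 1 and 6")
    t = (fitzpatrick_type - 1) / 5  # 0.0 for type 1, 1.0 for type 6
    return round(min_block + t * (max_block - min_block))


def pixelate(frame, block):
    """Replace each block-by-block tile with its average brightness.

    `frame` is a list of rows of integer brightness values.
    """
    height, width = len(frame), len(frame[0])
    out = [row[:] for row in frame]
    for y0 in range(0, height, block):
        for x0 in range(0, width, block):
            ys = range(y0, min(y0 + block, height))
            xs = range(x0, min(x0 + block, width))
            total = sum(frame[y][x] for y in ys for x in xs)
            average = total // (len(ys) * len(xs))
            for y in ys:
                for x in xs:
                    out[y][x] = average
    return out


if __name__ == "__main__":
    frame = [[0, 10, 20, 30], [40, 50, 60, 70]]
    print(pixelate(frame, block_size_for_type(1)))
```

Larger blocks discard more detail, which is how the sketch reproduces the effect described above: the higher the skin type, the less of the person remains visible.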

My goal for this project, and the reason I added section 1, is for users to realize that all of us are biased, and that only with very conscious awareness will we stop creating racist technologies that bring detrimental effects.

What is the code to racist technologies? The code is you and me, especially the you and me who assume that because we are “liberal” and “progressive”, we are not or will not be a part of the problem.

Classes

Introduction to Computational Media