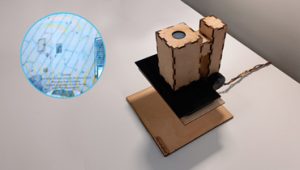

An installation and performance involving percussion objects hung from a ceiling, rhythmically resonated by solenoid motors.

Eamon Goodman

Description

Zoom link:

https://nyu.zoom.us/j/91942675074?pwd=Sm13YzQyVm9abEFUOXlFdUsrSk8rUT09

Go to https://midi-sender.herokuapp.com/ or click the project website to play the drums yourself!

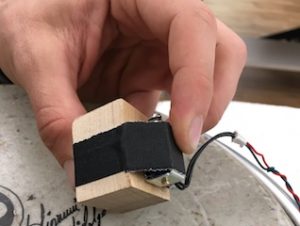

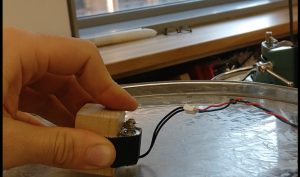

I have collected, arranged, and hung 7 percussive and sonic objects in an array around the listener's ear, be it a human or an electronic eardrum. Attached to each object is a solenoid motor that strikes it, and I control this striking both live with buttons and by creating rhythmic MIDI clips in Ableton Live. I'll then explore the vocabulary of sounds possible with my room-sized instrument and incorporate it into musical performance: perhaps on its own, played and manipulated by multiple people, or alongside other sound sources, for instance a pitch-detecting harmonizer I created or an acoustic instrument like the bass clarinet. If I have time and luck, I'll make it possible for spectators to trigger the sculpture over the web.
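To make the MIDI-to-solenoid idea concrete, here is a minimal sketch of how a note from an Ableton Live clip or a button press might be turned into a strike on one of the 7 objects. It is not the code running the installation. The note numbers (`BASE_NOTE`), the pulse lengths (`MIN_PULSE_MS`, `MAX_PULSE_MS`), and every function name are assumptions made up for illustration, and the actual hardware driver is left out entirely.

```python
from typing import Optional, Tuple

NUM_SOLENOIDS = 7    # the piece uses 7 hung objects
BASE_NOTE = 60       # assumption: MIDI note 60 triggers the first object
MIN_PULSE_MS = 5     # assumption: shortest pulse, for the softest strike
MAX_PULSE_MS = 40    # assumption: longest pulse, for the hardest strike


def note_to_solenoid(note: int) -> Optional[int]:
    """Return the solenoid index (0-6) for a MIDI note, or None if unmapped."""
    index = note - BASE_NOTE
    return index if 0 <= index < NUM_SOLENOIDS else None


def velocity_to_pulse_ms(velocity: int) -> int:
    """Scale MIDI velocity (1-127) linearly to a pulse length in milliseconds."""
    velocity = max(1, min(127, velocity))
    span = MAX_PULSE_MS - MIN_PULSE_MS
    return MIN_PULSE_MS + round(span * (velocity - 1) / 126)


def handle_midi_message(status: int, note: int, velocity: int) -> Optional[Tuple[int, int]]:
    """Turn a raw 3-byte MIDI message into a (solenoid, pulse_ms) strike.

    Returns None for anything that should not fire a solenoid: note-off
    messages, note-on with velocity 0, or notes outside the mapped range.
    """
    is_note_on = (status & 0xF0) == 0x90 and velocity > 0
    if not is_note_on:
        return None
    solenoid = note_to_solenoid(note)
    if solenoid is None:
        return None
    return solenoid, velocity_to_pulse_ms(velocity)


if __name__ == "__main__":
    # A loud hit on the first object, then a soft hit on the last one.
    print(handle_midi_message(0x90, 60, 127))
    print(handle_midi_message(0x90, 66, 1))
```

Mapping velocity to pulse length is one possible way to get dynamics out of a solenoid; a fixed pulse per object would be simpler if the strikes only need to be on or off.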