Devices that enhance meditative experiences in both remote and physical locations, acknowledging that meditation is a communal, multi-sensory practice.

Eden Chinn, Lucas Wozniak, Mee Ko, Rajshree Saraf

Description

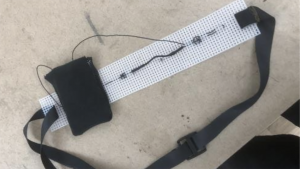

The user wears a belt fitted with a stretch sensor that measures their breathing. As the sensor stretches with the expansion of the chest, its readings are recorded and used to drive remote and physical outputs. Our project has two variations on this output:

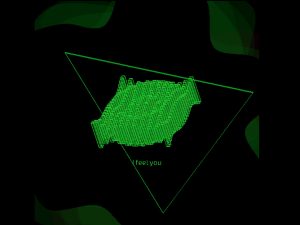

REMOTE/SERVER COMMUNICATION: People in different locations can each wear a breath sensor. Together, their breathing patterns drive a shared output in a p5.js sketch, where the breathing is represented by a feather animation that moves up and down. Both users' breathing patterns shape this motion collaboratively.
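As a rough illustration of how two breathing patterns might be merged into one feather position, the sketch below normalizes each wearer's raw sensor reading to a 0 to 1 range, averages the results, and maps the average to a vertical coordinate. The function names, calibration ranges, canvas height, and the choice of averaging are all assumptions made for this example; they are not taken from the linked p5 sketch.

```javascript
// Hypothetical helper: scale a raw stretch-sensor reading to 0..1
// using a per-wearer calibration range (the values used below are assumed).
function normalizeBreath(raw, restValue, fullInhaleValue) {
  const t = (raw - restValue) / (fullInhaleValue - restValue);
  return Math.min(1, Math.max(0, t)); // clamp out-of-range readings
}

// Average the normalized breath level of every connected wearer.
function combinedBreath(levels) {
  if (levels.length === 0) return 0;
  const sum = levels.reduce((acc, v) => acc + v, 0);
  return sum / levels.length;
}

// Map the shared breath level to a vertical position:
// 0 (exhaled) sits near the bottom, 1 (inhaled) near the top.
function featherY(level, canvasHeight, margin = 40) {
  const bottom = canvasHeight - margin;
  const top = margin;
  return bottom - level * (bottom - top);
}

// Example: two wearers with different (assumed) calibration ranges.
const a = normalizeBreath(600, 500, 700); // halfway through an inhale
const b = normalizeBreath(450, 300, 500); // three quarters through
const y = featherY(combinedBreath([a, b]), 400);
```

In a p5.js sketch, a value like `y` could be used inside `draw()` to position the feather image each frame. Averaging is only one option; taking the minimum or maximum of the two levels would give a different collaborative feel.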

PHYSICAL INSTALLATION: The stretch sensor allows breath to become a controller that manipulates physical phenomena. An installation of yarn pompoms is suspended from a frame and moves in reaction to the wearer’s inhalation and exhalation.
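The write-up does not say how the pompoms are driven, so the following is only a sketch under an assumed setup: a motor or servo that accepts a target angle. It smooths the normalized breath level with an exponential moving average, so the installation would move gently instead of jittering, then maps the result to an angle. The smoothing factor, the angle range, and both function names are hypothetical.

```javascript
// Hypothetical smoothing filter: an exponential moving average that
// damps sensor jitter. alpha near 1 follows the input closely;
// alpha near 0 responds slowly.
function makeSmoother(alpha) {
  let value = null;
  return function smooth(sample) {
    value = value === null ? sample : value + alpha * (sample - value);
    return value;
  };
}

// Map a 0..1 breath level to a whole-degree angle (range is assumed).
function breathToAngle(level, minAngle = 20, maxAngle = 160) {
  const clamped = Math.min(1, Math.max(0, level));
  return Math.round(minAngle + clamped * (maxAngle - minAngle));
}

// Example: an abrupt inhale followed by an exhale, smoothed over time.
const smooth = makeSmoother(0.5);
const angles = [0, 1, 1, 0].map((s) => breathToAngle(smooth(s)));
```

With these assumed settings, the angle approaches the inhale position in steps and eases back on the exhale, which is closer to the rhythm of breathing than a direct, unsmoothed mapping.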

Zoom link:

https://nyu.zoom.us/j/99001077668

p5 link:

https://editor.p5js.org/mnk2970/sketches/ylgyvcaia