Eric Wenqi Li

Browser LAB is a playground for experiencing novel capacities of the browser by playing with the virtual physical attributes of browser windows, such as size, position, color, layout, and sound.

https://www.wenqili.com/browser-lab

Description

We like web pages and web applications. Modern web browsing takes place within a browser window or tab, which is indeed a user-centered and practical service design.

Web pages are like paintings, and the browser window is their canvas and pigments. We focus mostly on the content made from the canvas and pigments, and rarely notice that the canvas and pigments themselves also have great stories to tell.

Browser LAB is an experimental project that treats browser windows as the content of expression, the material of design, and the storyteller of emotion, not only as the canvas. In Browser LAB, instead of interacting with HTML elements inside a single window, users connect directly with the browser windows themselves. It is a playground for learning more about the browser window by playing with its virtual physical attributes, such as size, position, color, layout, and sound.

In Browser LAB, three separate scenes were built, each showing a novel experience based on the essence of the Chrome browser.

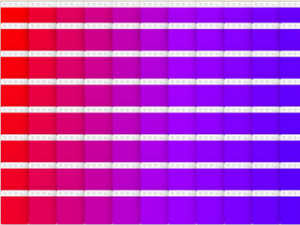

The first scene is a color grid layout for playing with multi-window interfaces. It experiments with the color and sound of windows and with how they might be organized as a group.
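As a rough illustration of how a grid of colored windows like this might be laid out, the sketch below splits a screen area into equal cells and builds a features string for `window.open` from each one. It is not the project's actual code: `gridCells`, `toFeatures`, `openGrid`, the `WindowCell` shape, and the hue assignment are all hypothetical names and choices made for this example.

```typescript
// Hypothetical description of one window in the grid (not from the project).
interface WindowCell {
  left: number;
  top: number;
  width: number;
  height: number;
  color: string;
}

// Split a screen area into rows x cols equal cells and give each cell a hue.
function gridCells(
  screenWidth: number,
  screenHeight: number,
  rows: number,
  cols: number
): WindowCell[] {
  const width = Math.floor(screenWidth / cols);
  const height = Math.floor(screenHeight / rows);
  const cells: WindowCell[] = [];
  for (let r = 0; r < rows; r++) {
    for (let c = 0; c < cols; c++) {
      const index = r * cols + c;
      // Assumed color scheme: spread hues evenly around the color wheel.
      const hue = Math.round((360 * index) / (rows * cols));
      cells.push({
        left: c * width,
        top: r * height,
        width,
        height,
        color: `hsl(${hue}, 80%, 60%)`,
      });
    }
  }
  return cells;
}

// Build the features string that window.open accepts for size and position.
function toFeatures(cell: WindowCell): string {
  return `popup,left=${cell.left},top=${cell.top},width=${cell.width},height=${cell.height}`;
}

// Open one window per cell when running in a browser; do nothing elsewhere.
// Browsers may block these popups unless they are triggered by a user gesture.
function openGrid(cells: WindowCell[]): void {
  const w = (globalThis as any).window;
  if (!w) return;
  for (const cell of cells) {
    const win = w.open("", "_blank", toFeatures(cell));
    if (win) win.document.body.style.background = cell.color;
  }
}
```

In a browser, calling `openGrid(gridCells(screen.availWidth, screen.availHeight, 2, 3))` from a click handler would attempt to open six windows tiled across the screen. Keeping the geometry in a pure function makes the layout easy to adjust without opening any windows.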

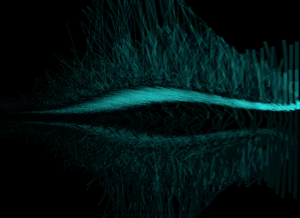

The second scene is a touch interaction with a single window. It experiments with the emotional and physical connections between the browser and human beings.

The third scene is an overlay sculpture made of browser windows. It experiments with the capacity of browser windows for storytelling. More scenes and experiments are being added.

Classes

Hacking the Browser, Interactive Music