Mary Notari

Using Mother Jones's comprehensive dataset of the past 35 years of mass shootings in the US as its score, this generated piece of music attempts to answer the question: how can music move us?

http://www.marynotari.com/2018/03/28/interactive-music-final-project/

Description

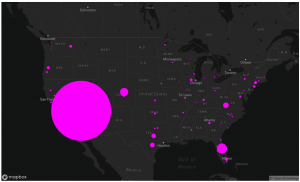

Since first publishing a report in 2012, Mother Jones has continuously updated a spreadsheet of dates, locations, casualties, and other metrics for every mass shooting that has occurred in the US since 1982.

This project grew out of Yotam Mann's class, “Interactive Music.” We were challenged to imagine and perform a completely novel musical score: one that did not use traditional music notation. To that end, I conceived of this dataset as my musical score. Each column of data points corresponds to a different kind of audio event within a browser-based sketch. I use the Tone.js and Moment.js libraries to generate the audio and time its events, and Mozart's “Requiem” provides the basis for the chord progression. The audio events occur in concert with a p5 animation over a map of the US, in which each shooting is visualized as an ellipse sized according to its casualty count. Users may hover their mouse over an ellipse to read a detailed description of that shooting as provided by Mother Jones.

After 3 minutes of continuous play, a button reading “Stop this” begins to fade in. The song and animation loop infinitely unless the user clicks the button, which leads to a 5calls.org page about anti-gun-violence advocacy. As with the shootings in real life, nothing will change unless those who are able to take action do so. This feature connects the function of the piece to its conceptual core: what is the point of aestheticizing data this fraught? Can there be a tangible connection between aesthetics and action? How can music make subjects that might otherwise be paralyzing and overwhelming accessible and knowable? Put another way: how can music move us?
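The idea of treating spreadsheet rows as a score can be illustrated with a small TypeScript sketch. This is a hypothetical illustration, not the project's actual code. The row fields (`date`, `fatalities`, `injured`), the function name `buildSchedule`, the linear compression of the 1982-to-present date range into a fixed playback length, the square-root ellipse sizing, and the casualty-to-velocity mapping are all assumptions. It also uses the built-in `Date.parse` in place of Moment.js so that it runs without dependencies. In a browser sketch, times like these could then be handed to Tone.js's Transport scheduler and the diameters to p5's `ellipse()`.

```typescript
// Hypothetical row shape: the real Mother Jones spreadsheet has many more
// columns (location, summary, weapon details, etc.); only three are assumed here.
interface ShootingRow {
  date: string;       // assumed ISO date string, e.g. "2000-01-01"
  fatalities: number;
  injured: number;
}

// One audio/visual event derived from one row (hypothetical structure).
interface ScheduledEvent {
  timeSec: number;    // seconds from the start of playback
  diameter: number;   // ellipse diameter in pixels
  velocity: number;   // hypothetical note loudness in [0.2, 1]
}

// Sort rows chronologically, compress the whole date range linearly into
// `durationSec` seconds, and size each ellipse so that its AREA (not its
// diameter) is proportional to the casualty count.
function buildSchedule(
  rows: ShootingRow[],
  durationSec: number,
  maxDiameter: number
): ScheduledEvent[] {
  if (rows.length === 0) return [];

  const sorted = [...rows].sort((a, b) => Date.parse(a.date) - Date.parse(b.date));
  const t0 = Date.parse(sorted[0].date);
  const t1 = Date.parse(sorted[sorted.length - 1].date);
  const span = Math.max(t1 - t0, 1); // avoid dividing by zero for a single date

  const maxCasualties = Math.max(...sorted.map(r => r.fatalities + r.injured), 1);

  return sorted.map(r => {
    const frac = (r.fatalities + r.injured) / maxCasualties; // 0..1
    return {
      timeSec: ((Date.parse(r.date) - t0) / span) * durationSec,
      diameter: Math.sqrt(frac) * maxDiameter, // area scales with casualties
      velocity: 0.2 + 0.8 * frac,              // hypothetical loudness mapping
    };
  });
}
```

The square root is a common choice for proportional symbols: scaling the diameter directly would make an event with four times the casualties look sixteen times larger in area, visually exaggerating the difference.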

Classes

Interactive Music