Aaron Montoya-Moraga, Philip J Donaldson

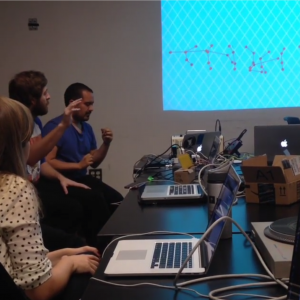

We created a way for users to manipulate music with simple gestures.

http://pancake.wtf/2015/12/02/icm-final-update-12115/ // http://www.aaronmontoyamoraga.com/?p=636

Description

Music has been a large motivating factor in both Aaron’s work and mine so far at ITP. For this project we set out to explore how people might interact with sound in a gestural environment, and hopefully to make music a little more fun and approachable for people who have never played a musical instrument. Our project gives users two new instruments to experiment with: the Kinect and the Leap Motion sensors. By simply gesturing over the instruments, users begin to uncover all the music they can make with just a twist, flick, or roll of the wrist.

At its core, the project sends raw distance data from the sensors into our programming environment, where we parse it and pass it on to Max for synthesis. The Kinect and Leap Motion are powerful pieces of hardware, which makes them well suited to sound manipulation. Each device can read so much about your body or hands that it was great to experiment with gestures and design instruments that make sense to the user. This work has opened the door to experimenting with interfaces not traditionally built for musical expression. We look forward to continuing it and creating unique instruments that sound great and are fun to play. Enjoy!
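The page does not say which language the parsing step used or how the data reached Max. The following is a minimal Python sketch of one possible version of that pipeline. It assumes the data travels as Open Sound Control (OSC) messages over UDP, a protocol Max can receive with its `udpreceive` object. It normalizes raw distance readings into control values between 0 and 1, then encodes them as an OSC message. The calibration range, the `/hand/position` address, and port 7400 are hypothetical choices for illustration only, not details from the project.

```python
import socket
import struct

# Hypothetical calibration range (millimetres) for one sensor axis, e.g. hand
# height above a Leap Motion; real ranges depend on the device and the setup.
RAW_MIN_MM = 100.0
RAW_MAX_MM = 400.0


def normalize(raw: float, lo: float = RAW_MIN_MM, hi: float = RAW_MAX_MM) -> float:
    """Map a raw distance reading to 0..1, clamping values outside the range."""
    t = (raw - lo) / (hi - lo)
    return min(1.0, max(0.0, t))


def _osc_string(s: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    b = s.encode("ascii") + b"\x00"
    return b + b"\x00" * (-len(b) % 4)


def encode_osc(address: str, *values: float) -> bytes:
    """Build an OSC 1.0 message whose arguments are all 32-bit big-endian floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_to_max(sock: socket.socket, address: str, values,
                host: str = "127.0.0.1", port: int = 7400) -> None:
    """Send one OSC message over UDP (hypothetical host and port)."""
    sock.sendto(encode_osc(address, *values), (host, port))


if __name__ == "__main__":
    # Fake readings standing in for sensor frames (x, y, z distances in mm).
    frames = [(120.0, 250.0, 390.0), (300.0, 80.0, 410.0)]
    for frame in frames:
        controls = [normalize(v) for v in frame]
        packet = encode_osc("/hand/position", *controls)
        print(controls, len(packet), "bytes")
```

To deliver the messages, open a UDP socket with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` and call `send_to_max(sock, "/hand/position", controls)` for each frame. In Max, a `[udpreceive 7400]` object would then output the three floats for mapping onto synthesis parameters. Clamping in `normalize` keeps a hand that drifts out of the calibrated zone from producing out-of-range parameter values.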

Classes

Introduction to Computational Media