Net-Natyam is a hybrid training system and performance platform that explores the relationships between music, machine learning, and movement through electronic sound composition, pose estimation techniques, and classical Indian dance choreography.

Ami Mehta, David Currie

Description

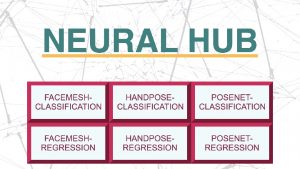

Bharatanatyam is a form of classical Indian dance that combines complex footwork, hand gestures, and facial expressions to tell stories. The dance is traditionally accompanied by Carnatic music played by an ensemble that includes a mridangam drum, a flute, cymbals, and other instruments. Net-Natyam uses a webcam and three ml5.js machine learning models (PoseNet, Handpose, and Facemesh) to detect the movements of a Bharatanatyam dancer and trigger a corresponding sequence of electronically composed sounds.
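To make the detect-then-trigger idea concrete, here is a minimal sketch of how detected body keypoints might be turned into sound triggers. It is not the project's actual code: the description does not say how movements are mapped to sounds, so the `SOUND_FOR_PART` table, the sound names, the movement threshold, and the helper names are all hypothetical. The keypoint shape (`part`, `position`, `score`) is assumed to follow the format commonly returned by PoseNet; the model calls themselves are left out so the logic runs on its own.

```javascript
// Hypothetical mapping from tracked body parts to sound names.
// (Assumption for illustration; the source does not specify this mapping.)
const SOUND_FOR_PART = {
  leftAnkle: "mridangam-low",
  rightAnkle: "mridangam-high",
  leftWrist: "cymbal",
  rightWrist: "flute",
};

// Convert a PoseNet-style keypoint list into a { part: {x, y} } lookup,
// skipping keypoints whose confidence score is below minScore.
function toPartMap(keypoints, minScore = 0.5) {
  const parts = {};
  for (const kp of keypoints) {
    if (kp.score >= minScore) parts[kp.part] = kp.position;
  }
  return parts;
}

// Compare two consecutive frames and return the sounds to trigger.
// A part fires when it moved more than `threshold` pixels between frames
// and was confidently detected in both frames.
function soundsToTrigger(prevKeypoints, currKeypoints, threshold = 40) {
  const prev = toPartMap(prevKeypoints);
  const curr = toPartMap(currKeypoints);
  const sounds = [];
  for (const [part, sound] of Object.entries(SOUND_FOR_PART)) {
    if (!prev[part] || !curr[part]) continue;
    const dist = Math.hypot(
      curr[part].x - prev[part].x,
      curr[part].y - prev[part].y
    );
    if (dist > threshold) sounds.push(sound);
  }
  return sounds;
}

// Example: the left wrist moves 60 px, the right ankle barely moves.
const frameA = [
  { part: "leftWrist", position: { x: 100, y: 100 }, score: 0.9 },
  { part: "rightAnkle", position: { x: 200, y: 400 }, score: 0.9 },
];
const frameB = [
  { part: "leftWrist", position: { x: 100, y: 160 }, score: 0.9 },
  { part: "rightAnkle", position: { x: 205, y: 402 }, score: 0.9 },
];
console.log(soundsToTrigger(frameA, frameB)); // [ 'cymbal' ]
```

In a live setting, a function like this would presumably be called each time the pose model reports a new frame, with the returned names handed to whatever audio layer plays the composed sounds. Hand gestures and facial expressions from Handpose and Facemesh could feed a similar lookup, though their output formats differ and are not covered by this sketch.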