Max Horwich

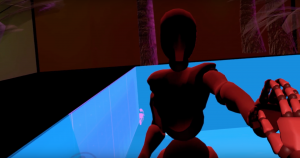

A voice-controlled web VR experience invites you to sing along with the robotic choir

https://wp.nyu.edu/maxhorwich/2018/04/30/join/

Description

Join is an interactive musical experience for web VR. A choir of synthesized voices sings from all sides in algorithmically generated four-part harmony, while the user changes the environment by raising their own voice in harmony.

Inspired by the Sacred Harp singing tradition, the music is generated in real time from Markov chains derived from the original Sacred Harp songbook. Each of the four vocal melodies is played from one of the four corners of the virtual space toward the center, where the listener experiences the harmony in head-tracked 3D audio. A microphone input allows the listener to change the VR landscape with sound, transporting them as they join in song.
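The page does not say how the chains are built, so what follows is only a minimal sketch of the general technique, not the piece's actual code. It assumes a first-order chain over MIDI note numbers, with one chain per voice part. It counts note-to-note transitions in training melodies, then walks the chain, sampling each next note in proportion to how often it followed the current one. The functions `train_markov` and `generate` and the toy tenor lines are hypothetical. The toy lines are not taken from the songbook.

```python
import random
from collections import defaultdict


def train_markov(melodies, order=1):
    """Count transitions from each state (a tuple of `order` notes) to the next note.

    Hypothetical helper: a plain n-th order Markov chain over MIDI note numbers.
    """
    table = defaultdict(lambda: defaultdict(int))
    for melody in melodies:
        for i in range(len(melody) - order):
            state = tuple(melody[i:i + order])
            table[state][melody[i + order]] += 1
    return table


def generate(table, start, length, rng):
    """Walk the chain from `start`, sampling each next note weighted by its count."""
    order = len(start)
    melody = list(start)
    while len(melody) < length:
        state = tuple(melody[-order:])
        choices = table.get(state)  # .get() does not create empty entries
        if not choices:
            break  # dead end: this state was never followed by a note in training
        notes = list(choices)
        weights = [choices[n] for n in notes]
        melody.append(rng.choices(notes, weights=weights, k=1)[0])
    return melody


if __name__ == "__main__":
    # Hypothetical toy training data (one voice part), NOT from the Sacred Harp songbook
    tenor_lines = [[67, 69, 71, 72, 71, 69, 67], [67, 71, 72, 74, 72, 71, 69, 67]]
    table = train_markov(tenor_lines, order=1)
    print(generate(table, start=[67], length=12, rng=random.Random(42)))
```

Training four such tables, one per voice part, and stepping them together would give four simultaneous lines. Nothing in this sketch enforces harmony between the lines. Any voice-leading or chord constraints the piece may apply are not described on this page.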

While the choir is currently programmed to sing only in solfege (the way the first verse of a Sacred Harp song is usually sung), I am in the process of teaching it to improvise lyrics as well as melodies. Using text also drawn from the Sacred Harp songbook, I am training a similar set of probabilistic algorithms on words, just as I did on notes. From there, I will use a sawtooth oscillator playing the MIDI Markov chain as the carrier and a synthesized voice reading the generated text as the modulator, combining them into one signal to create a quadrophonic vocoder that synthesizes hymns in real time.
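The carrier/modulator arrangement described above is a classic channel vocoder. Below is a rough, offline, pure-Python sketch of that technique. It is not the project's implementation, which runs in the browser and is not shown here. Both signals are split into the same set of band-pass filters. The envelope of each modulator band is then imposed on the matching carrier band, and the bands are summed. The band centers, the filter Q, the 50 Hz envelope cutoff, and the decaying-tone stand-in for the synthesized voice are all assumptions chosen for illustration. All function names are hypothetical.

```python
import math


def sawtooth(freq, sr, n):
    """Naive (not band-limited) sawtooth in [-1, 1): the carrier."""
    return [2.0 * ((freq * i / sr) % 1.0) - 1.0 for i in range(n)]


def bandpass(signal, center, q, sr):
    """Band-pass biquad (RBJ Audio EQ Cookbook, constant 0 dB peak gain)."""
    w0 = 2 * math.pi * center / sr
    alpha = math.sin(w0) / (2 * q)
    b0, b1, b2 = alpha, 0.0, -alpha
    a0, a1, a2 = 1 + alpha, -2 * math.cos(w0), 1 - alpha
    out = []
    x1 = x2 = y1 = y2 = 0.0
    for x in signal:
        y = (b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2) / a0
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


def envelope(signal, sr, cutoff=50.0):
    """Envelope follower: full-wave rectify, then smooth with a one-pole low-pass."""
    coeff = math.exp(-2 * math.pi * cutoff / sr)
    env, level = [], 0.0
    for x in signal:
        level = (1 - coeff) * abs(x) + coeff * level
        env.append(level)
    return env


def vocode(carrier, modulator, sr, bands, q=4.0):
    """Channel vocoder: scale each carrier band by the matching modulator band's envelope."""
    n = min(len(carrier), len(modulator))
    out = [0.0] * n
    for center in bands:
        carrier_band = bandpass(carrier[:n], center, q, sr)
        mod_env = envelope(bandpass(modulator[:n], center, q, sr), sr)
        for i in range(n):
            out[i] += carrier_band[i] * mod_env[i]
    return out


if __name__ == "__main__":
    sr = 16000
    midi_note = 67  # e.g. one note emitted by the Markov chain (G4)
    freq = 440.0 * 2 ** ((midi_note - 69) / 12)  # standard MIDI-to-Hz conversion
    carrier = sawtooth(freq, sr, sr // 2)
    # Stand-in modulator: a decaying 800 Hz tone in place of a synthesized voice
    modulator = [math.sin(2 * math.pi * 800 * i / sr) * math.exp(-4 * i / sr)
                 for i in range(sr // 2)]
    out = vocode(carrier, modulator, sr, bands=[200, 400, 800, 1600, 3200])
    print(len(out))
```

Under this reading, the quadrophonic version would run four such vocoders, one per voice part, and route each output to its own corner of the virtual space.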

For this show, I will present Join in a custom VR headset: a long, quilted veil affixed to a Google Cardboard. Rather than strapping across the user’s face, this headset is draped over the head and hangs down, completely obscuring the face and much of the body. After experiencing the virtual environment, participants are invited to decorate or inscribe the exterior of the headset with patches, fabric pens, or in any other way they see fit, leaving their own mark on a piece that hopefully left some mark on them.

Classes

Algorithmic Composition, Electronic Rituals, Oracles and Fortune-Telling, Expressive Interfaces: Introduction to Fashion Technology, Interactive Music, Open Source Cinema