real-time timbre control using machine-learning-powered pose detection

, Alex Wang

Description

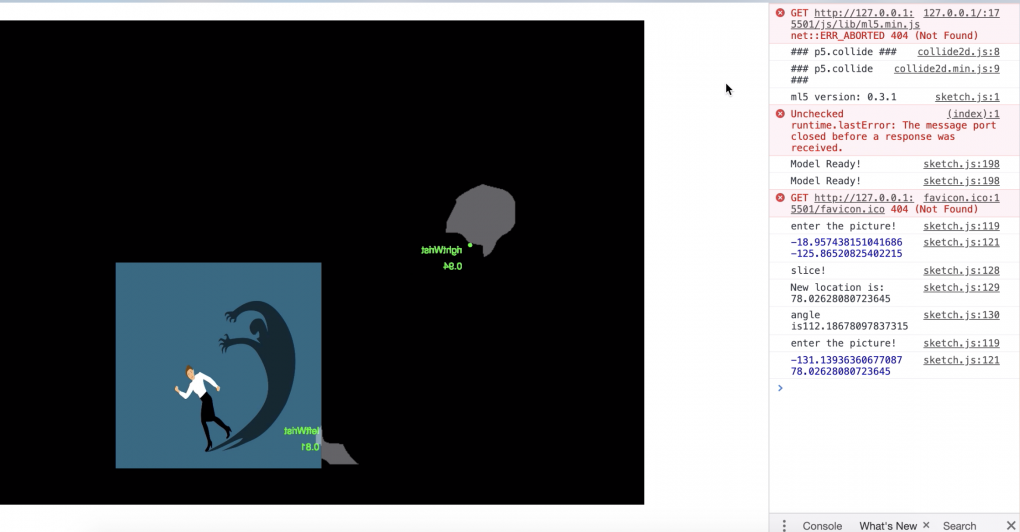

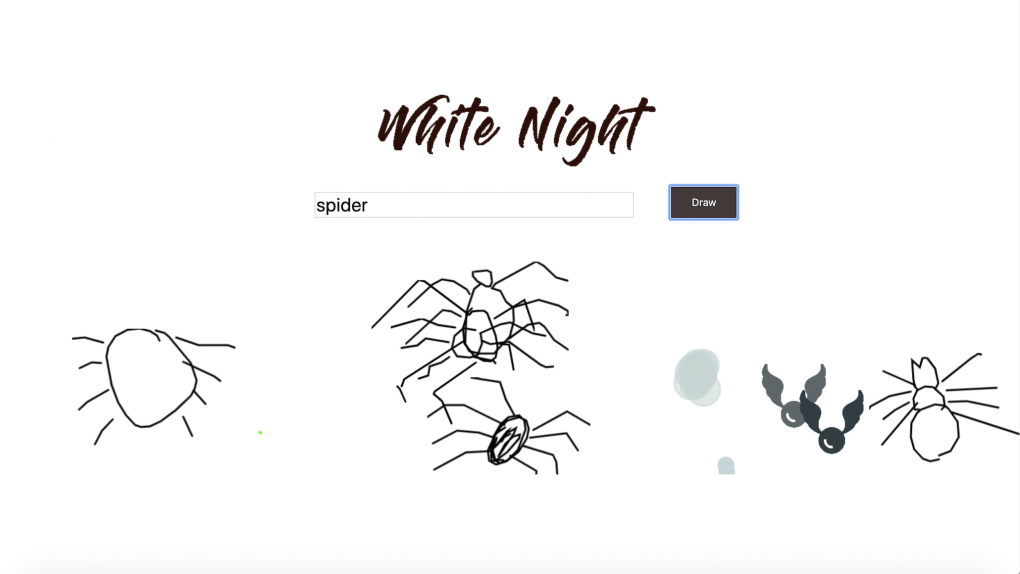

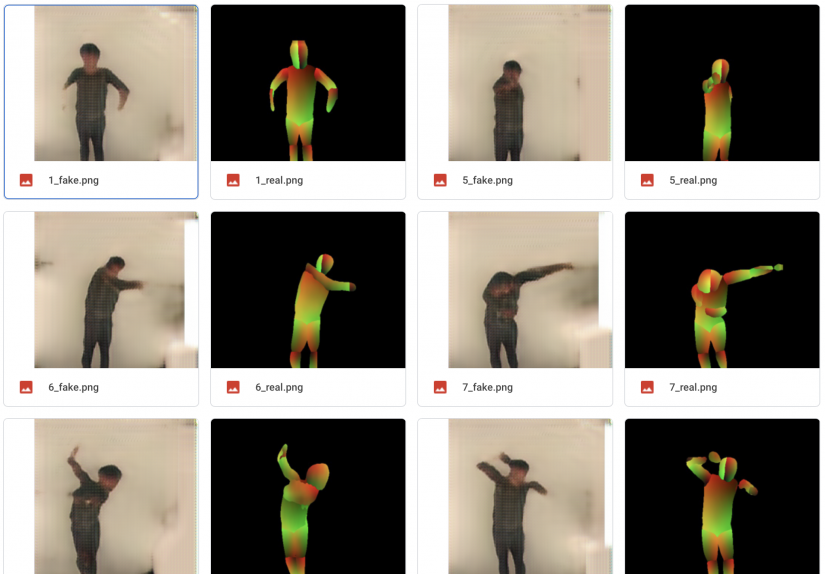

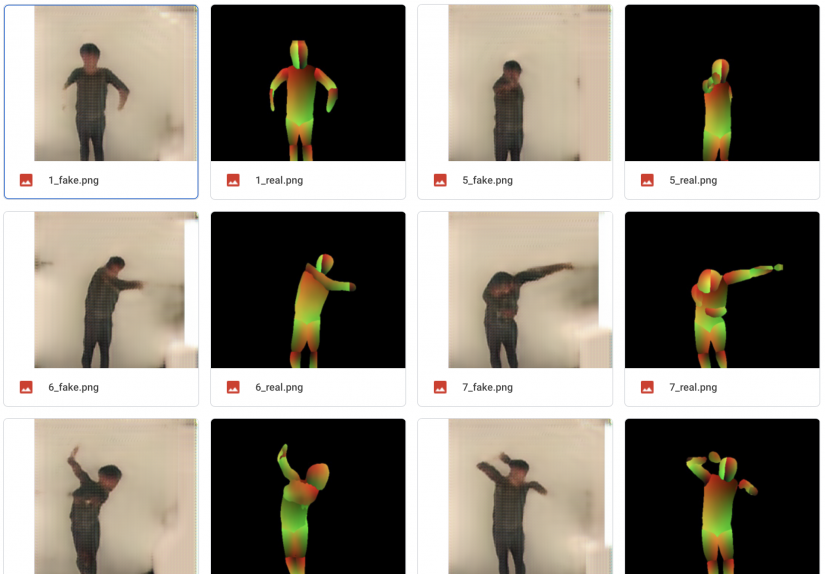

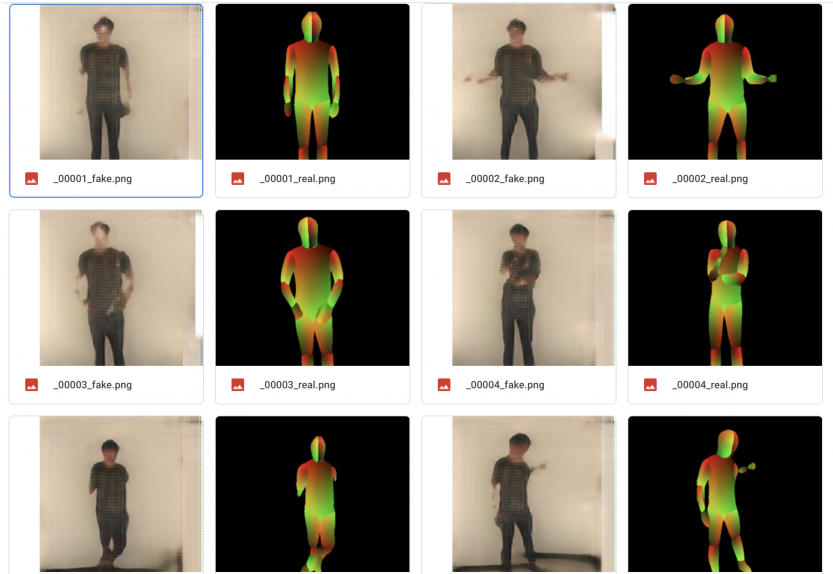

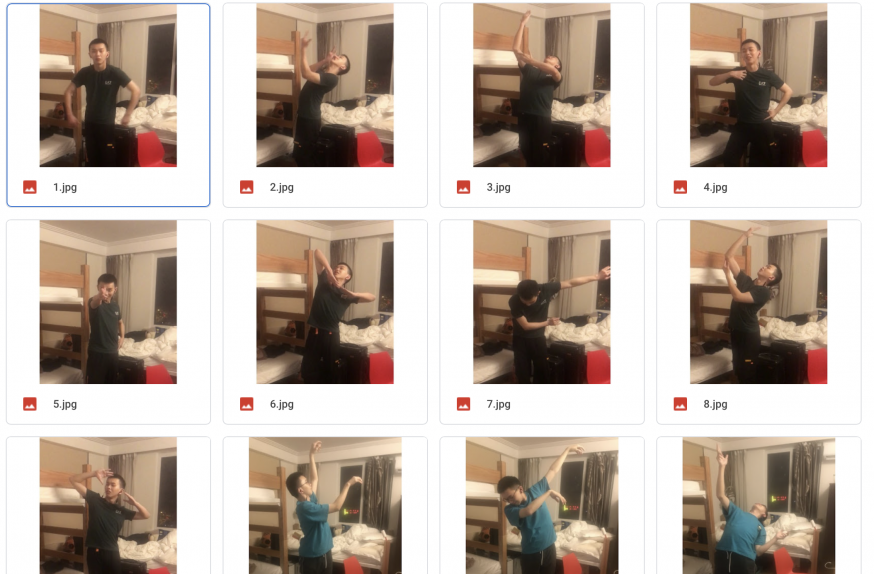

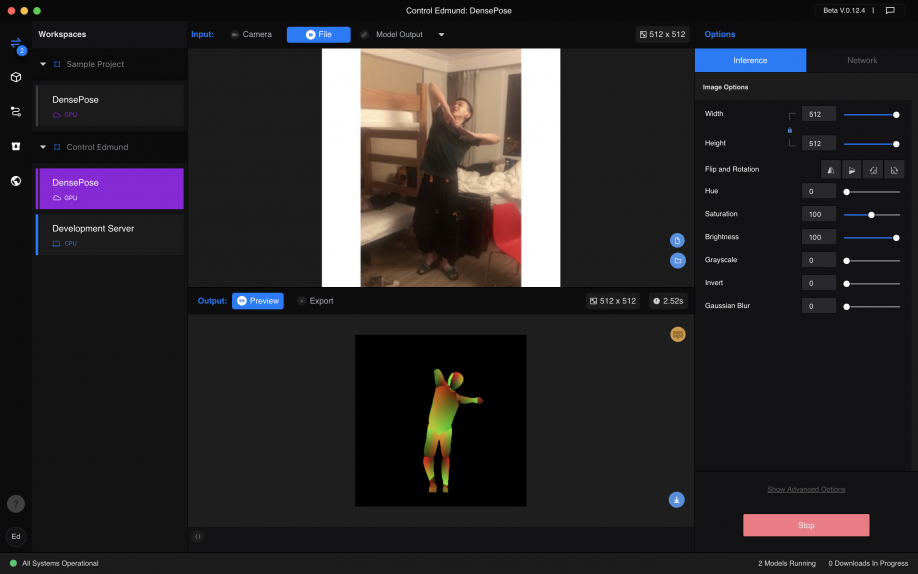

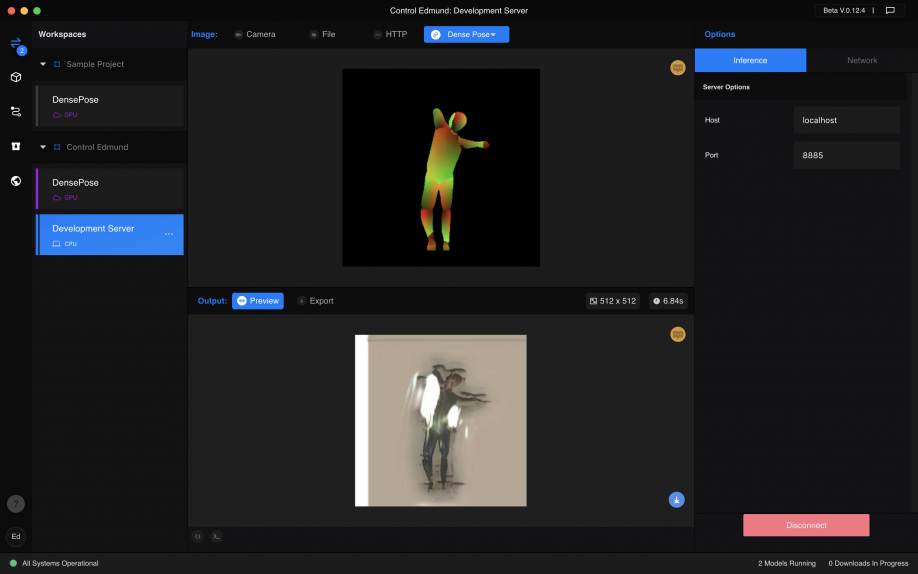

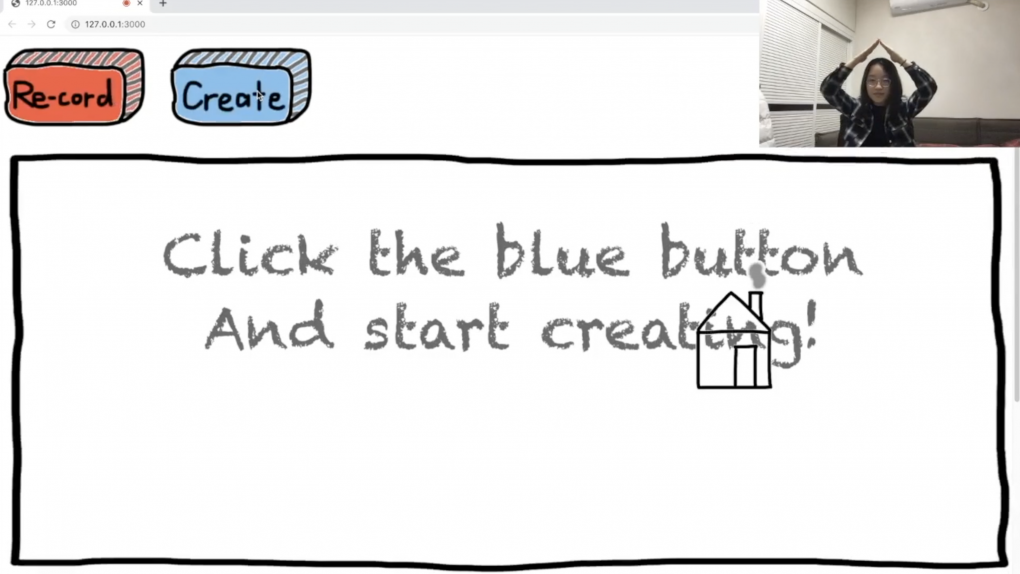

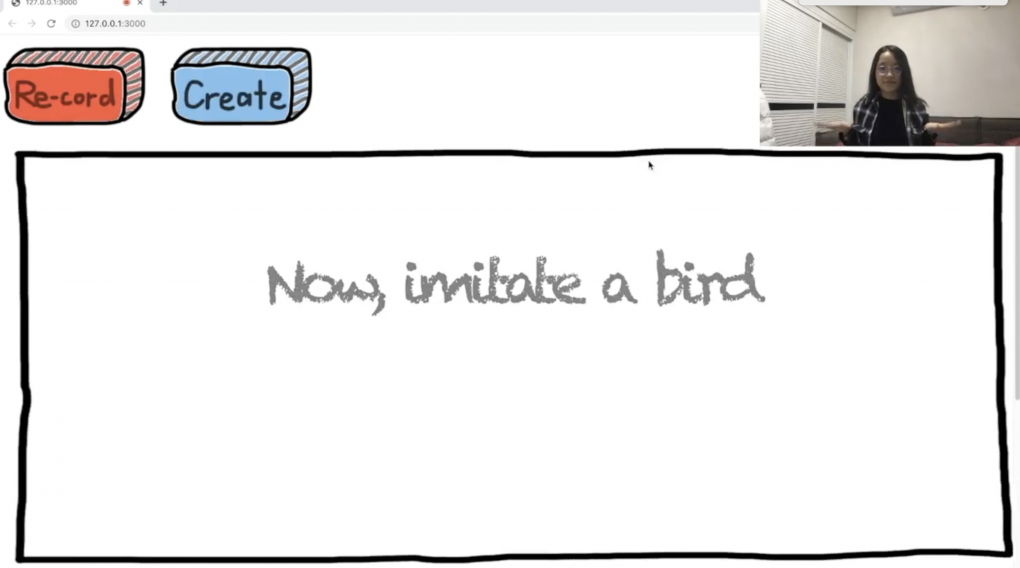

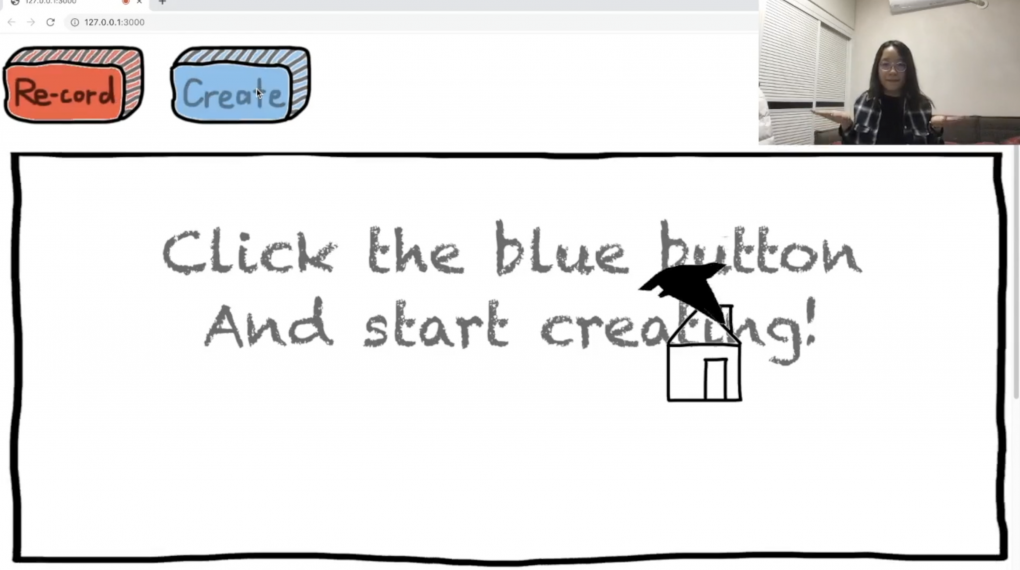

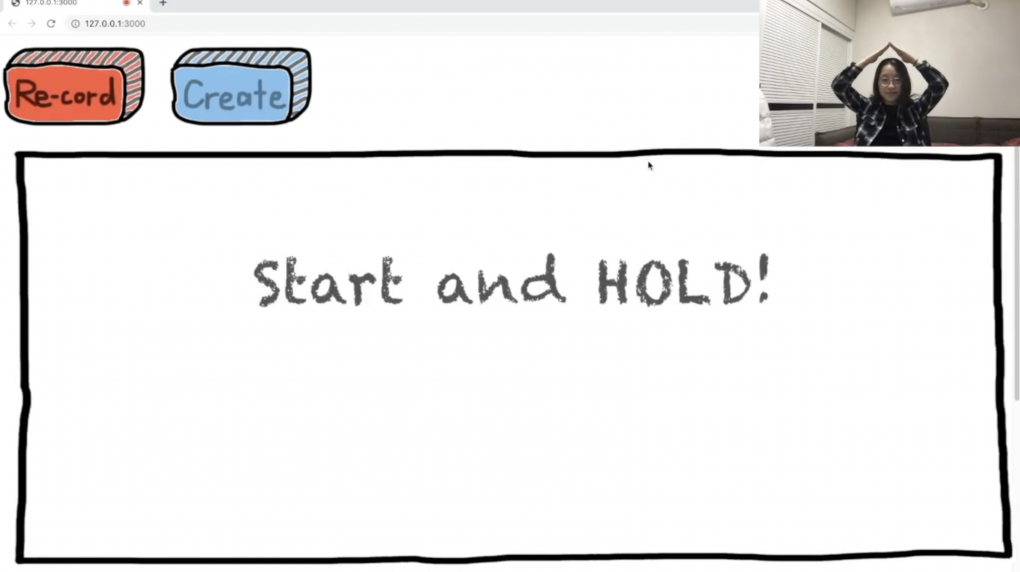

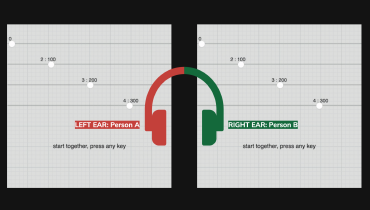

Expression is a p5.js sketch that lets you control musical timbre with your body position. It also includes a built-in, music-synced visual for a song I produced for the Software Music Production course at Steinhardt.

Tone.js drives the music-synced animations and lyric subtitles. It also lets each stem track be assigned to its own effect plugins before all of them are routed into the master track. PoseNet, one of the ml5.js machine learning models, provides body positions, which are then used to set the cutoff frequencies of three lowpass filters, one per track: vocals, drums, and lead.

This project grew out of an earlier one in which I used the ml5 model to build a rhythm game. For this version I added Tone.js and took it in a more musical direction. Musicians can use it both in production and in live performance, and it offers many ways to interact with the program naturally and express themselves in an electronic setting.
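The pose-to-filter step can be sketched in plain JavaScript. This is a minimal, hypothetical sketch rather than the project's actual code. It assumes the ml5 PoseNet keypoint format (`part`, `score`, `position.x`/`position.y`). The body-part-to-track assignment (`TRACK_PARTS`), the cutoff range, and the confidence threshold are all illustrative choices. Each keypoint's vertical position is mapped to a cutoff on an exponential scale, so raising the corresponding body part opens that track's filter.

```javascript
// Hypothetical assignment of PoseNet body parts to the three filtered tracks.
// Expression's real mapping is not documented; these are illustrative.
const TRACK_PARTS = {
  vocal: "nose",
  drums: "leftWrist",
  lead: "rightWrist",
};

const MIN_CUTOFF_HZ = 200;   // assumed lower bound: filter nearly closed
const MAX_CUTOFF_HZ = 18000; // assumed upper bound: filter essentially open
const MIN_SCORE = 0.3;       // assumed confidence threshold for a keypoint

// Map a vertical pixel position to a cutoff frequency on an exponential
// (log-frequency) scale. y = 0 is the top of the video frame, which fully
// opens the filter; the bottom of the frame gives MIN_CUTOFF_HZ.
function yToCutoff(y, frameHeight) {
  const clamped = Math.min(Math.max(y / frameHeight, 0), 1);
  const t = 1 - clamped; // 0 at the bottom, 1 at the top
  return MIN_CUTOFF_HZ * Math.pow(MAX_CUTOFF_HZ / MIN_CUTOFF_HZ, t);
}

// Given keypoints shaped like ml5 PoseNet output
// ({ part, score, position: { x, y } }), return a cutoff per track,
// or null when that body part was not detected with enough confidence.
function cutoffsFromKeypoints(keypoints, frameHeight) {
  const result = {};
  for (const [track, part] of Object.entries(TRACK_PARTS)) {
    const kp = keypoints.find((k) => k.part === part);
    result[track] =
      kp && kp.score >= MIN_SCORE ? yToCutoff(kp.position.y, frameHeight) : null;
  }
  return result;
}

// Example with made-up keypoints for a 480-pixel-tall frame.
const keypoints = [
  { part: "nose", score: 0.95, position: { x: 320, y: 120 } },
  { part: "leftWrist", score: 0.9, position: { x: 150, y: 480 } },
  { part: "rightWrist", score: 0.1, position: { x: 500, y: 0 } },
];
const cutoffs = cutoffsFromKeypoints(keypoints, 480);
console.log(cutoffs);
```

The exponential mapping is a design choice: perceived brightness tracks frequency roughly logarithmically, so a linear pixel-to-hertz mapping would crowd most of the audible change into a small part of the movement range. In the browser, each non-null value could then be applied to that track's lowpass filter, for example a `Tone.Filter` of type `"lowpass"`, with `filter.frequency.rampTo(value, 0.1)`. The short ramp smooths frame-to-frame jitter in the pose estimates. A `null` entry means the body part was not detected confidently, so the filter can simply keep its previous cutoff.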