On one end of the cam: Viola. On the other ends of the web: all of you. Over a livestream, observe and control Viola's behaviors.

David Currie, Viola He

Description

[VIOLASTREAM 2.0 LIVE]

Wednesday, December 16, 8-10pm EST

Thursday, December 17, 8-10pm EST

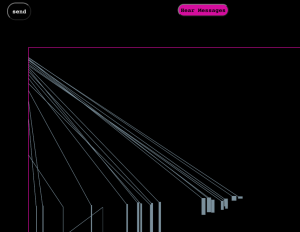

To participate, go to: https://violola.herokuapp.com/

To watch the stream, go to: twitch.tv/violola/

>>>>>>>>>>>>>>>>>>>

VIOLASTREAM 2.0 is an online interactive performance in which I hand control of my actions over to my audience, who collectively vote on my next tasks, movements, and emotions, and on when I perform them.

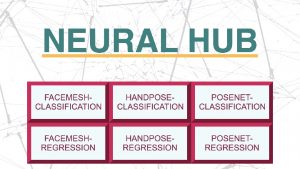

The audience has access to a webpage with an embedded livestream, a set of action choices, and a comment section. This page is connected to my stream, which displays those inputs in real time. While a computer voice reads all of your commands aloud to me, only the highest-voted task is acted out. To stop the current action and trigger the next one, you need to keep voting for “stop task” until its vote count surpasses the count that triggered the current task.
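The voting rule above can be sketched as a small tally. This is a minimal Python sketch under stated assumptions, not the project's actual code: the class name `VoteBoard`, discarding "stop task" votes when nothing is running, resetting a task's votes once it is stopped, and breaking ties in favor of the task that was voted for first are all hypothetical choices.

```python
from collections import Counter
from typing import Optional

STOP = "stop task"


class VoteBoard:
    """Hypothetical vote tally illustrating the stop-task rule."""

    def __init__(self) -> None:
        self.votes = Counter()    # task name -> number of votes
        self.current: Optional[str] = None
        self.trigger_count = 0    # votes the current task had when it started
        self.stop_votes = 0       # "stop task" votes against the current task

    def vote(self, choice: str) -> Optional[str]:
        """Record one vote and return the task being performed afterward."""
        if choice == STOP:
            if self.current is None:
                return None       # assumption: nothing to stop, vote discarded
            self.stop_votes += 1
            # The current task ends only once stop votes surpass its trigger count.
            if self.stop_votes > self.trigger_count:
                del self.votes[self.current]  # assumption: its tally resets
                self.current = None
                self.stop_votes = 0
                self._start_next()
        else:
            self.votes[choice] += 1
            if self.current is None:
                self._start_next()
        return self.current

    def _start_next(self) -> None:
        # Highest-voted remaining task; max() keeps the first-inserted one on ties.
        if self.votes:
            task = max(self.votes, key=self.votes.__getitem__)
            self.current = task
            self.trigger_count = self.votes[task]
```

For example, if "dance" starts with 1 vote while "sing" collects 2, two "stop task" votes end the dance and start "sing", which then needs three "stop task" votes to end.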

With performance artists Tehching Hsieh and Marina Abramovic in mind, I'm using web technologies and streaming platforms to explore my own body and identity performance in relation to others. While the audience acts as commander and spectator, Viola's body performs the role of object and machine, creating a cybernetic relationship with the webcam and livestream as the medium.